One of the strangest — and perhaps most dangerous — nuclear tests ever conducted was Operation ARGUS, in late 1958.

The basic idea behind the operation was proposed by the Greek physicist Nicholas Christofilos, then at Livermore. If you shot a nuclear warhead off in the upper atmosphere, Christofilos argued, it would create an artificial radiation field similar to the Van Allen radiation belts that surround the planet. In essence, it would create a “shell” of electrons around the planet.

The tactical advantage to such a test is that hypothetically you could use this knowledge to knock out enemy missiles and satellites that were coming in. So they gave it a test, and indeed, it worked! (With some difficulty; it involved shooting nuclear weapons very high into the atmosphere on high-altitude rockets off of a boat in the middle of the rough South Atlantic Ocean. One wonders what happened to the warheads on them. They also had some difficulty positioning the rockets. The video linked to discusses this around the 33 minute point. Also, around the 19 minute mark is footage of various Navy equator-crossing hazing rituals, with pirate garb!)

It created artificial belts of electrons that surrounded the planet for weeks. Sound nutty yet? No? Well, just hold on — we’ll get there.

(Aside: Christofilos is an interesting guy; he had worked as an elevator repairman during World War II, studying particle physics in his spare time. He independently came up with the idea for the synchrotron and eventually was noticed by physicists in the United States. He later came up with a very clever way to allow communication with submarines submerged deep under water, which was implemented in the late 20th century.)

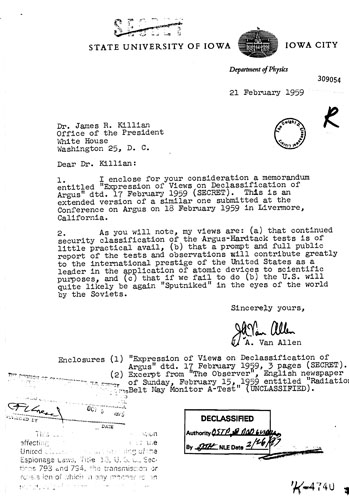

In early 1959, not long after the test itself, none other than James Van Allen (of the aforementioned Van Allen radiation belts) argued that the United States should rapidly declassify and release information on the Argus experiment.[1]

Van Allen wanted it declassified because he was a big fan of the test, and thought the US would benefit from the world knowing about it:

As you will note, my views are (a) that continued security classification of the Argus-Hardtack tests is of little practical avail, (b) that a prompt and full public report of the tests and observations will contribute greatly to the international prestige of the United States as a leader in the application of atomic devices to scientific purposes, and (c) that if we fail to do (b) the U.S. will be quite likely be again ‘Sputniked’ in the eyes of the world by the Soviets.

Basically, Van Allen argued, the idea of doing an Argus-type experiment was widely known, even amongst uncleared scientists, and the Soviets could pull off the same test themselves and get all the glory.

But here’s the line that makes me cringe: “The U.S. tests, already carried out successfully, undoubtedly constitute the greatest geophysical experiment ever conducted by man.”

This was an experiment that affected the entire planet, “the greatest geophysical experiment ever conducted by man,” and it was approved, vetted, and conducted under a heavy, heavy veil of secrecy. What if the predictions had been wrong? It’s not an impossibility that such a thing could have been the case: the physics of nuclear weapons are in a different energy regime than most other terrestrial science, and as a result there have been some colossal miscalculations that were only revealed after the bombs had gone off and, oh, contaminated huge swathes of the planet, or, say, accidentally knocked out satellite and radio communications. (The latter incident linked to, Starfish-Prime, was a very similar test that did cause a lot of accidental damage.)

There’s some irony in that the greatest praise, in this case, is a sign of how spooky the test was. At least to me, anyway.

This is the same sort of creepy feeling I get when I read about geoengineering, those attempts to purposefully use technology to affect things at the global scale, now in vogue again as a last-ditch attempt to ameliorate the effects of climate change. It’s not just the hubris (though, as an historian, that’s something that’s easy to see as an issue, given that unintended consequences are rife even with technologies that don’t purposefully try to remake the entire planet). It’s also the matter of scale. Something happens when you go from small-scale knowledge (produced in the necessarily artificial conditions that laboratory science requires) to large-scale applications. Unpredicted effects and consequences show up with a vengeance, and you get a rapid education in how many collective and chaotic effects you do not really understand. It gives me the willies to ramp things up into new scales and new energy regimes without the possibility of doing intermediate stages.

(Interestingly, my connection between Argus and geoengineering has been the subject of at least one talk by James R. Fleming, a space historian at Colby College, who apparently argued that Van Allen later regretted disrupting the Earth’s natural magnetosphere. Fleming has a paper on this in the Annals of Iowa, but I haven’t yet tracked down a copy.)

As for Argus’s declassification: while the Department of Defense was in the process of declassifying Argus, per Van Allen’s recommendations, they got a call from the New York Times saying that they were about to publish on it. (The Times claimed to have known about Argus well before the tests took place.) It’s not clear who leaked it, but leak it did. The DOD decided that they should declassify as much as they could and send it out to coincide with this, and the news of Argus hit the front pages in March 1959.

- Citation: James Van Allen to James R. Killian (21 February 1959), copy in the Nuclear Testing Archive, Las Vegas, NV, document NV0309054.

1. I enclose for your consideration a memorandum entitled “Expression of Views on Declassification of Argus” dtd. 17 February 1959 (SECRET).

This cover letter itself is stamped “SECRET.”

How did a professor in Iowa City convey secret documents to the White House in 1959?

I presume he wouldn’t have just dropped them in the mail. Was there a military courier service he could use? Would he simply have to travel to Washington himself?

Well, Van Allen did have a security clearance, so he would have had access to that whole world of possibilities. I believe a SECRET document at that time could have been sent through registered mail if there was no other way to get it there (TOP SECRET would have required a dedicated courier). I have the Cold War regulations somewhere; I can look them up.

Another darkly humorous aspect of this history is that Van Allen’s discovery of the Earth’s radiation belts (in early 1958) was taken by several people as evidence that the Soviets had beaten us to detonating an atomic weapon in space (see Discovery of the Magnetosphere, Gillmor and Spreiter, eds.). And, in fact, it was only through observations of the Argus blasts that Van Allen was able to conclude that the radiation detected by Explorers I and III could not have resulted from a Soviet explosion. The fallout from Argus only lasted about 3 weeks, whereas the natural radiation belts were, to everyone’s surprise, a permanent feature. And, since I know you enjoy these things, the record for longest-lived artificial radiation belt goes to Starfish (of course), whose fallout was observed in space nearly 2 years after its detonation.

Thanks, Alex! I wasn’t aware of the early misunderstanding regarding the Van Allen belts themselves. Starfish Prime was an amazing bungle — it’s not every day you unleash an EMP attack on Hawaii and knock out six satellites (including a few of your own). This is what I mean about the scariness of scaling up.

A significant factor in staging ARGUS and in its classification was the need to establish the feasibility of detecting nuclear explosions in near space. Remember that this period saw several scientific controversies raging over the enforceability of a test ban agreement. Those who resisted the idea of a test ban proposed ever more outlandish and impractical ways that they presumed the Soviets would pursue to hide testing from the US. These included Albert Latter’s decoupling theory and the possibility that the USSR might try to conceal a space test on the far side of the Moon.

Obviously, ARGUS established that it WAS possible to detect such an explosion in near space.

The fallout from ARGUS also likely lasted longer than 3 weeks. Consider the fallout generated from the lower burst altitudes of ORANGE and TEAK, both of which were salted with specific isotopes in order to establish the time scale of fallout’s return to lower altitudes, which continued for a considerable period of time stretching into years.

As for planet-scale experiments, ARGUS was simply a case of raising the stakes of what was already underway. I argue in my forthcoming dissertation that fallout from atmospheric testing was itself a global scale experiment, one even more troubling than ARGUS’s potential for havoc. Consider that the global fallout generated by testing made a laboratory of the entire planet’s ecosystem, with fallout being found virtually everywhere in the environment by the late 1950s.

Atmospheric testing was effectively the largest scale experiment ever conducted on unconsenting test subjects by dint of exposing the Earth’s entire population to fallout. Given the post-Nuremberg sensitivity to the use of unconsenting subjects for scientific research, this demonstrates how nuclear weapons research was often effectively given carte blanche to ignore a variety of ethical considerations under the circumstances of the Cold War.

The impending test ban agreement also motivated the speed at which the military moved. The operation was only approved in May, and they were already launching at the end of August, much more quickly than the 18+ months of planning that went into most tests.

With regard to interfering with the environment, after the second nuke was launched and the military was in the right place to observe the effects, Van Allen argued they shouldn’t launch any more. Not because he was concerned about altering the environment, but because it made the experiment worse. He argued that they would just be ruining the data by adding another artificial belt on top of the first ones. Obviously the military (I believe with Christofilos’ urging) disagreed.

In reply to Mike Lehman’s comment, it’s likely I’m abusing the term “fallout”. What I wanted to convey was that charged particles from Argus remained in trapped orbits in the Earth’s magnetosphere for about 2-3 weeks. My source for this is HESS, W.N., The Radiation Belt and Magnetosphere, Blaisdell, Waltham, MA, 1968, chapter 5. Argus was only a few kilotons, but high-altitude (200 – 500 km); Orange and Teak were megaton blasts, but they were so low (< 80 km) that they would not have produced any magnetically trapped radiation. By my count, in all of US and Soviet testing, only 7 blasts resulted in artificial radiation belts (with Starfish being the champion).

With regard to the claim that atmospheric testing was the largest scale experiment ever conducted on unconsenting test subjects, what shall we make of the fact that humans have been sending toxins into the atmosphere since the beginning of civilization? For Mike’s dissertation, I think it would be very interesting to address how radioactive fallout is similar to and different from, say, the products of iron-smelting that can be detected in Greenland’s ice cores dating back to Roman times.

@Mike and @Alex Boxer — Both of these are really interesting perspectives on the question of “experimentation.”

I wonder if it matters that in the case of nuclear testing, we’re talking about a government program as opposed to private activity? I think Mike’s point is that democratically elected leaders (in the case of the US) put national security well ahead of public health in the case of atmospheric testing, and that by itself is a moral detriment. Alex B.’s point that the nuclear contamination is hardly the only — or even the predominant — contamination in the world is a good one to keep us from getting too exceptional when thinking about nukes. But in most cases the contamination Alex is referring to is put out there by private individuals — although the fact that the government ostensibly ought to be regulating that does make them culpable to some degree (at least, I think an ethicist would think so).

Anyway, these are interesting questions.

Alex,

I’m not sure a distinction in the legal standing of the source of contamination, whether public or private, is all that helpful here. However, I believe that there is a clear case that governments have broad responsibility for the health and welfare of their citizens, although their obligations to non-citizens are less clear. That’s one of the ironies of nuclear weapons. They were a threat to those who would use them, as well as to those targeted.

The question of whether or not generation of fallout, an inherent and inevitable by-product of any uncontained nuclear explosion despite the high hopes of Lewis Strauss and Edward Teller in projecting the myth of “clean” weapon designs, was an intentional experiment is somewhat hazy in terms of the archival record. Is there a document somewhere that states, “We intend to contaminate the global environment with radioactive fallout in order to conduct a comprehensive study of its effects”? Probably not.

One of my theoretical approaches is the concept of infrapolicy. An element I use to define infrapolicy is that policies sometimes effectively exist because of omission, i.e. organizations overlook certain aspects and cherry-pick other aspects at issue when addressing problems, often preferring to overlook troubling issues in advancing toward a goal. For example here, there was the certification that what became the Nevada Test Site would not pose an undue risk to the public by those who were at the same time responsible for helping set national radiation exposure standards. A seemingly massive conflict of interest, but just the way things were done back then.

The AEC and Pentagon were very interested in the health effects of high-level radiation exposures, i.e. those that might cause imminent incapacitation of a soldier, and did extensive research in this area. On the other hand, the AEC was decidedly less interested in the effects of low-level radiation exposures and didn’t effectively pursue exposure off-site from testing in Nevada until various parties raised concerns.

The military took a different approach. They were much like the AEC in terms of research interest in the health effects of radiation exposure, with one big exception: the use of fallout for intelligence purposes. Ziegler and Jacobson’s 1995 Spying without Spies does an excellent job of outlining the systematic experimental approach used by the Air Force to develop the Atomic Energy Detection System (AEDS). If you want to discover how to detect someone else’s nuclear test, you use your own as the test subject. Thus, virtually every postwar test served a variety of experimental needs to develop the use of fallout for intelligence purposes. From the beginning, it was realized that a global scale system was required. By the time that the USSR detonated their first test article in late August 1949, the AEDS detected it within a few days and confirmed it as a nuclear explosion whose origin was with the USSR.

That’s where Z&J leave off and I pick up. Sure, the US didn’t intend to contaminate the global ecosphere. The concept itself wasn’t yet fully formed during the early Cold War, although the potential risks posed by atmospheric nuclear testing were quickly recognized and became part of the inspiration for Rachel Carson’s 1962 Silent Spring, as well as for an upsurge in transnational public opinion that became a surprisingly strong force in bringing seemingly implacable enemies to the table to forge an agreement to end atmospheric testing just 14 short years after the Soviets joined the nuclear club.

However, it is clear that the US government was very much aware of the global spread of fallout quite early on, collected extensive data as part of an experimental regime connected to this spread, and largely disregarded the consequential contamination that resulted.

Now, if a biological research lab — government, private or university — experimented with a lethal pathogen and failed to take action to prevent its spread outside the lab, that act of omission would be considered as questionable from several ethical positions. But it would be clear that whatever the original intent of the experiment was, it would now involve a considerable number of unconsenting test subjects brought into it inadvertently by a failure to act to prevent such a circumstance.

Such behavior would be very troubling, but whether it was such a hypothetical example or the very real consequences of Cold War nuclear testing, I don’t believe either is ethically exactly equivalent to what the Nazis did. Perhaps my invocation of Nuremberg was a bit too strong. However, with the lessons of Nuremberg absorbed, one can’t help but note that the grey area between intentional policy and infrapolicy is much narrower than I think most can be comfortable with in retrospect.

Contributing to such unease is the fact that the Pentagon is still sitting on the most accurate and extensive data on fallout distribution, despite its potentially crucial contribution to better defining the health and environmental impacts of fallout from testing. Oddly, I doubt there’s anything revelatory of current sources and methods that isn’t well-known at the IAEA, for instance. The CDC and National Cancer Institute noted in a 2001 report that the absence of this highest quality data prevents a better estimate of the actual impacts of low-level radiation exposures due to fallout. One could fathom many reasons why this data is still off-limits to researchers, but the one that seems to fit best with past behavior is that the government wants the clock to run out for those alive and exposed during those years. On the other hand, the data might also show that fallout really wasn’t that big of a deal. Whichever it is, someone apparently feels it’s not worth the risk to expose it to objective evaluation.

One curiosity this situation does raise: if the government has responsibility for potentially affecting the health of the entire US population, isn’t that an interesting argument for national health care…? Much of that stuff is not going away any time soon, as shown by the discovery that plutonium detected around Fukushima, initially feared to indicate a meltdown of the MOX reactor (#3?), mostly turned out to be leftover Cold War fallout. The experiment isn’t over, but I digress.
