The history of nuclear secrecy is an interesting topic for a lot of reasons, but one of the more wonky ones is that it inverts the kind of study traditionally done in the history of science. The history of science is usually a study of how knowledge is made and then circulates; a history of secrecy is about how knowledge is made and then is not circulated. Or, at least, its non-circulation is attempted, with varying degrees of success. These kinds of studies are still not the “norm” amongst historians of science, but in recent years they have become more common, both because historians have come to understand that secrecy is often used by scientists for various “legitimate” reasons (e.g., preserving priority), and because historians have come to see deliberately created ignorance as a major theme in its own right (e.g., Robert Proctor coined the term agnotology for the study of such ignorance, as in the deliberate actions of the tobacco industry to foster ignorance and uncertainty regarding the link between lung cancer and cigarettes).

The USS Nautilus with a nice blob of redaction. No reactor for you! From a 1951 hearing of the Joint Committee on Atomic Energy — apparently the reactor design is still secret even today?

What I find particularly interesting about secrecy, as a scholar, is that it is like a sap or a glue that starts to stick to everything once you introduce a little bit of it. Try to add a little secrecy to your system and pretty soon more secrecy is necessary: it spreads. I remarked on this some time back, in the context of Los Alamos designating all spheres as a priori classified: once you start down the rabbit-hole, it becomes easier and easier for the secrecy system to become more entrenched, even if your intentions are completely pure (and, of course, more so if they are not).

In this vein, I’ve for a while been struck by the work of some friends of mine in the area of arms control work known as “zero-knowledge proofs” (and the name alone is an attention-grabber). A zero-knowledge proof is a concept derived from cryptography (e.g., one computer proves to another that it knows a secret, but doesn’t give the secret away in the process), but as applied to nuclear weapons, it is roughly as follows: Imagine a hypothetical future where the United States and Russia have agreed to have very low numbers of nuclear warheads, say in the hundreds rather than the current thousands. They want to mutually verify that each other’s stockpiles are as they say they are. So each sends inspectors over to count the other’s warheads.

Already this involves some hypotheticals, but the real wrench is this: the US doesn’t want to give its nuclear design secrets away to the Russian inspectors. And the Russians don’t want to give theirs to the US inspectors. So how can the inspectors verify that what they are looking at are actually warheads, and not, say, steel cans made to look like warheads, if they can’t take them apart?

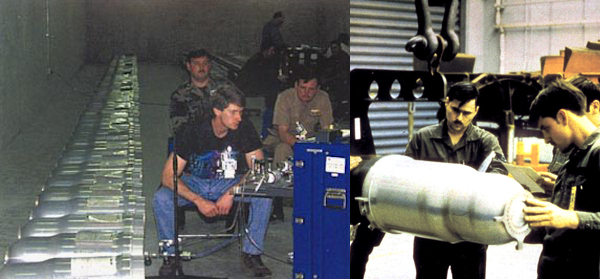

Let’s imagine you had a long line of purported warheads, like the W80, shown here. How can you prove there’s an actual nuke in each can, without knowing or learning what’s in the can? The remarkable W80s-in-a-bunker image is from a blog post by Hans Kristensen at Federation of American Scientists.

Now you might ask why people would fake having warheads (because that would make their total number of warheads seem higher than it was, not lower), and the answer is usually about verifying warheads put into a queue for dismantlement. So your inspector would show up to a site and see a bunch of barrels and would be told, “all of these are nuclear warheads we are getting rid of.” So if those are not actually warheads then you are being fooled about how many nukes they still have.

You might know how much a nuclear weapon ought to weigh, so you could weigh the cans. You might do some radiation readings to figure out if they are giving off more or less what you expect a warhead to give off. But remember that your inspector doesn’t actually know the configuration inside the can: they aren’t allowed to know how much plutonium or uranium is in the device, or what shapes it is in, or what configuration it is in. So this will put limitations both on what you’re allowed to know beforehand, and what you’re allowed to measure.

Now, amusingly, I had written all of the above a few weeks ago, with a plan to publish this issue as its own blog post, when one of the groups came out with a new paper and I was asked whether I would write about it for The New Yorker’s science/tech blog, Elements. So you can go read the final result, to learn about some of the people (Alexander Glaser, Sébastien Philippe, and R. Scott Kemp) who are doing work on this: “The Virtues of Nuclear Ignorance.” It was a fun article to write, in part because I have known two of the people for several years (Glaser and Kemp) and they are curious, intelligent people doing really unusual work at the intersection of technology and policy.

I won’t re-describe their various methods of doing it here; read the article. If you want to read their original papers (I have simplified their protocols a bit in my description), you can read them here: the original Princeton group paper (2014), the MIT paper from earlier this year (2016), and the most recent paper from the Princeton group with Philippe’s experiment (2016).

In the article, I use a pine tree analogy to explain the zero-knowledge proof. Kemp provided that. There are other “primers” on zero-knowledge proofs on the web, but most of them are, like many cryptographic proofs, not exactly intuitive, everyday scenarios. One of the ones I considered using in the article was a famous one regarding a game of Where’s Waldo:

Imagine that you and I are looking at a page in one book of Where’s Waldo. After several minutes, you become frustrated and declare that Waldo can’t possibly be on the page. “Oh, but he is,” I respond. “I can prove it to you, but I don’t want to take away the fun of you finding him for yourself.” So I get a large piece of paper and cut out a tiny hole in exactly the shape of Waldo. While you are looking away, I position it so that it obscures the page but reveals the striped wanderer through the hole. That is the essence of a zero-knowledge proof — I prove I’m not bluffing without revealing anything new to you.

I found Waldo in the Battle of Troy. How can I prove it without giving his location away? A digital version of the described “proof”: I found his little head and cut it out with Photoshop. In principle, you now know I really found him, without knowing where he is… but might that face be from a different Waldo page? (Image from Where’s Waldo)

A true zero-knowledge proof, though, would also have to rule out the possibility of faking a positive result, and here the Waldo example fails: I might not know where Waldo is on the page we are mutually looking at, but while you are not looking, I could set up the Waldo-mask on another page where I do know he is hiding. Worse yet, I could carry with me a tiny Waldo printed on a tiny piece of paper, just for this purpose. This might sound silly, but if there were stakes attached to my identification of Waldo, cheating would become expected. In the cryptologic jargon, any actual proof needs to be both “complete” (a prover who really has the knowledge can always demonstrate it) and “sound” (a prover who lacks the knowledge cannot fake the demonstration). Waldo doesn’t satisfy both.
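For readers who think better in code, here is a minimal sketch of what “complete” and “sound” look like in the original cryptographic setting. It uses the Schnorr identification protocol, a standard textbook scheme, and not anything taken from the warhead papers. The group parameters are toy-sized and the function names are made up for this illustration.

```python
import secrets

# Toy parameters, far too small for real use: P = 2*Q + 1 with P and Q prime,
# and G generates the subgroup of order Q modulo P.
P, Q, G = 2039, 1019, 4

def keygen():
    """Return (secret x, public value y = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)

def verify(y, t, c, s):
    """Verifier's check: G^s must equal t * y^c (mod P)."""
    return pow(G, s, P) == (t * pow(y, c, P)) % P

def run_round(y, x_claimed, c=None):
    """One round: prover commits, verifier challenges, prover responds."""
    r = secrets.randbelow(Q)        # prover's one-time random nonce
    t = pow(G, r, P)                # commitment sent to the verifier
    if c is None:
        c = secrets.randbelow(Q)    # verifier's random challenge
    s = (r + c * x_claimed) % Q     # response; the nonce r masks the secret
    return verify(y, t, c, s)

def simulate(y):
    """Build an accepting transcript WITHOUT the secret by picking c and s first."""
    c, s = secrets.randbelow(Q), secrets.randbelow(Q)
    y_inv = pow(y, P - 2, P)        # modular inverse via Fermat's little theorem
    t = (pow(G, s, P) * pow(y_inv, c, P)) % P
    return t, c, s
```

A prover who holds the real secret passes every round, which is completeness. A prover who plugs in a wrong secret passes only if the challenge happens to be zero, a 1-in-Q chance that shrinks to nothing with realistic parameters or repeated rounds, which is soundness. The `simulate` function is the zero-knowledge part: anyone can manufacture valid-looking transcripts after the fact without the secret, so a transcript from a real run teaches an honest verifier nothing it could not have produced alone.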

Nuclear weapons issues have been particularly fraught with verification problems. The first attempt to rein in nuclear proliferation, the United States’ Baruch Plan of 1946, failed in the United Nations in part because it was clear that any meaningful plan to prevent the Soviet Union from developing nuclear weapons would involve a freedom of movement and inspection that was fundamentally incompatible with Stalinist society. The Soviet counter-proposal, the Gromyko Plan, was essentially a verification-free system, not much more than a pledge not to build nukes, and was subsequently rejected by the United States.

The Nuclear Non-Proliferation Treaty has binding force, in part, because of the inspection systems set up by the International Atomic Energy Agency, which physically monitors civilian nuclear facilities in signatory nations to make sure that sensitive materials are not being illegally diverted to military use. Even this regime has been controversial: many of the issues regarding Iran revolve around the limits of inspection, as the Iranians argue that many of the facilities the IAEA would like to inspect are militarily secret, though non-nuclear, and thus off-limits.

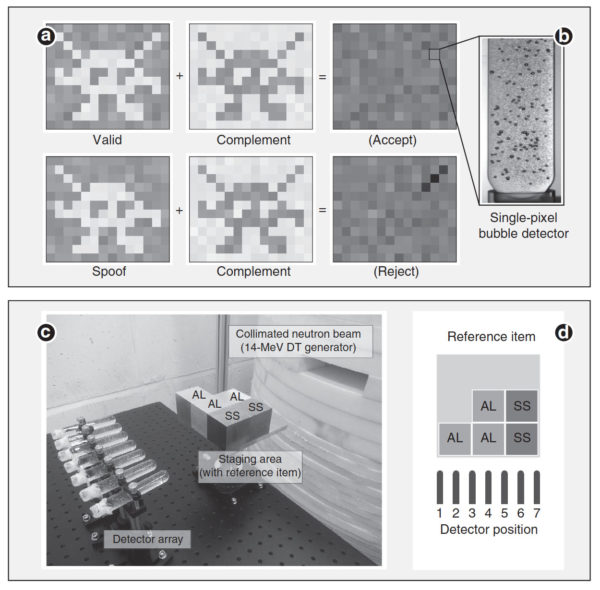

From the Nature Communications paper, showing (at top) the principle of what a 2D example would look like (with Glaser’s faux Space Invader). The complement is the “preload” setting mentioned in my New Yorker article: when combined with the new reading, it ought to result in a virtually null reading. At bottom, the setup of the proof-of-concept version, with seven detectors.
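The preload idea in the caption comes down to simple arithmetic, which a few lines of code can make concrete. This is only a sketch of the principle, not the authors’ implementation: the target value `N_MAX`, the function names, and all of the counts are invented for illustration, and it ignores the statistical fluctuation that real detector counts have (the `tolerance` parameter is a crude stand-in for that).

```python
N_MAX = 1000  # hypothetical agreed target total for every detector

def make_preloads(template_counts):
    """Preload each detector with the complement of the count a real item would add."""
    return [N_MAX - n for n in template_counts]

def measure(preloads, item_counts):
    """Counts from the inspected item add on top of whatever was preloaded."""
    return [p + n for p, n in zip(preloads, item_counts)]

def passes(readings, tolerance=0):
    """The inspector sees only totals; a match means every total sits at N_MAX."""
    return all(abs(r - N_MAX) <= tolerance for r in readings)

# Made-up counts for seven detectors, as in the proof-of-concept setup.
template = [120, 340, 560, 610, 430, 250, 90]
genuine = list(template)                       # an item identical to the template
hoax = [120, 340, 500, 610, 430, 250, 90]      # differs at a single detector

preloads = make_preloads(template)
genuine_readings = measure(preloads, genuine)
hoax_readings = measure(preloads, hoax)
```

With a genuine item, every entry of `genuine_readings` is the same flat value, so the inspector learns that the item matches and nothing about its shape. With the hoax, one detector falls short of `N_MAX`, which flags the mismatch, though it also leaks something about how the items differ.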

One historical example of the importance of verification comes from the Biological Weapons Convention of 1972. It contained no verification measures at all: the USA and USSR just pledged not to develop biological weapons (and the Soviets denied having a program at all, a flat-out lie). The United States had already unilaterally destroyed its offensive weapons prior to signing the treaty, though the Soviets long expressed doubt that all possible facilities had been removed. The US lack of interest in verification was partially because it suspected that the Soviets would object to any measures to monitor their work within their territory, but also because US intelligence agencies didn’t really fear a Soviet biological attack.

Privately, President Nixon referred to the BWC as a “jackass treaty… that doesn’t mean anything.” And as he put it to an aide: “If somebody uses germs on us, we’ll nuke ’em.” 1

But immediately after signing the treaty, the Soviet Union launched a massive expansion of its secret biological weapons work. Over the years, it applied the newest genetic-engineering techniques to the effort of making whole new varieties of pathogens. Years later, after all of this had come to light and the Cold War had ended, researchers asked the former Soviet biologists why the USSR had violated the treaty. Some said that intelligence officers had led them to believe that the US was probably doing the same thing, since if it weren’t, what was the point of a treaty without verification?

A bad verification regime, however, can also produce false positives, which can be just as dangerous. Consider Iraq, where the United States set up a context in which it was very hard for the Iraqi government to prove that it was not developing weapons of mass destruction. It was easy to imagine ways in which they might be cheating, and this, among other factors, drove the push for the disastrous Iraq War.

In between these extremes are the more political considerations: the possibility of cheating at treaties invites criticism and strife. It gives ammunition to those who would oppose treaties and diplomacy in general. Questions about verification have plagued American political discourse about the US-Iranian nuclear deal, including the false notion that Iran would be allowed to inspect itself. If one could eliminate any technical bases for objections, it has been argued, then at least those who opposed such things on principle would not be able to find refuge in them.

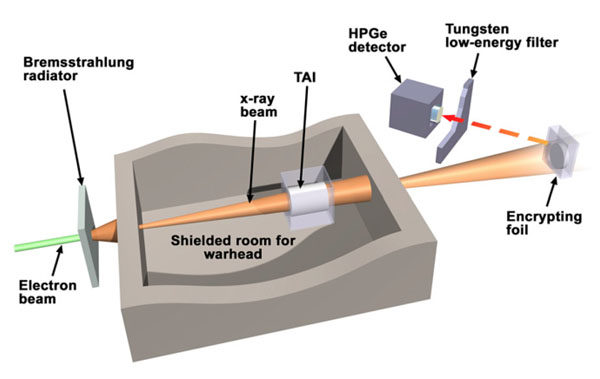

The setup from Kemp, et al. The TAI is the Treaty Accountable Item, i.e. the warhead you are testing.

This is where the zero-knowledge protocols could come in. What’s interesting to me, as someone who studies secrecy, is that if the problem of weapon design secrecy were removed, then this whole system would be unnecessary. It is, on some level, a contortion: an elaborate work-around to avoid sharing, or learning, any classified information. Do American scientists really think the Russians have any warhead secrets that we don’t know, or vice versa? It’s possible. A stronger argument for continued secrecy is that there are ways that an enemy’s weapons could be rendered ineffective if their exact compositions were known (neutrons, in the right quantity, can “kill” a warhead, causing its plutonium to heat and expand, and causing its chemical high-explosives to degrade; if you knew exactly what level of neutrons would kill a nuke, it would play into strategies of trying to defend against a nuclear attack).

And, of course, that hypothetical future would include actors other than the United States and Russia: the other nuclear powers of the world are less likely to want to share nuclear warhead schematics with each other, and an ideal system could be used by non-nuclear states involved in inspections as well. But even if everyone did share their secrets, such verification systems might still be useful, because they would eliminate the need for trust altogether, and trust is never perfect.

A little postscript on the article: I want to make sure to thank Alex Glaser, Sébastien Philippe, and R. Scott Kemp for devoting a lot of their weekends to making sure I understood the underlying science of their work well enough to write about it. Milton Leitenberg gave me a lot of valuable feedback on the Biological Weapons Convention, and even though none of that made it into the final article, it was extremely useful. Areg Danagoulian, a colleague of Kemp’s at MIT who has been working on their system (and who first proposed using nuclear resonance fluorescence as a means of approaching this question), didn’t make it into the article, but anyone seriously interested in these protocols should check out his work as well. And of course the editor I work with at The New Yorker, Anthony Lydgate, should really get more credit than he does for these articles, and on this one in particular managed to take the unwieldy 5,000-word draft I sent him and chop it down to 2,000 words very elegantly. And, lastly, something amusing: I noticed that Princeton Plasma Physics Laboratory released a film of Sébastien talking about the experiment. Next to him is something heavily pixellated out… what could it be? It looks an awful lot like a copy of Unmaking the Bomb, a book created by Glaser and other Princeton faculty (and I made the cover)…

- On the “jackass treaty,” see Milton Leitenberg and Raymond Zilinskas, The Soviet Biological Weapons Program: A History (Harvard University Press, 2012), quoted on 537. On “we’ll nuke ’em,” the aide was William Safire. For his account, see William Safire, “Iraq’s Tons of Germs,” New York Times (13 April 1995).

You mention here and in your New Yorker article that the U.S. doesn’t want to give away nuclear design secrets to the Russians, and vice versa. Is it your belief that by this point that restriction is just silly stubborn-mindedness and secrecy for secrecy’s sake? 65 years after Teller-Ulam, are there really likely to be vital design secrets that one side has and the other doesn’t? It’s my uninformed layman’s guess that small, efficient thermonuclear weapon designs are essentially commodity items by now for the major powers.

So this is a question I talked about with a few people at some length. There were a few categories of response:

1. Even if there weren’t really secrets to be held back, the culture of secrecy is such that it isn’t likely that secrecy will go away before warhead verification would be needed. And even if secrecy did go away, it’s still probably easier to come up with a method that doesn’t involve trusting anyone. So it’s a moot point, potentially.

2. Knowledge of the exact details of warhead innards could potentially help an adversary plan to render them inert in the case of actual war. The exact details are unclear, but it has to do with being able to estimate how much radiation you would need to deposit on a warhead to render its inner parts non-working or at least poorly-working. They prefer the uncertainty there.

3. While it isn’t really suspected that the US or Russia have big design secrets to keep from each other, there might be little “surprises” that they’d rather not share. And more importantly, if you are talking about including any other nations in this agreement, it quickly gets tricky: e.g., knowing the exact quantities of fissile material in each warhead might set other nations on new design paths.

Separately, one might consider this to be partially the difference between the declassification of a principle, and the declassification of a specific implementation. The idea of a stealth fighter is not classified, but many of the specifics of any active stealth fighter usually would be. Those who were arguing for eliminating bomb secrecy in the 1940s usually would draw this distinction as well: general principles of the technology should not be classified (because in principle they are available to any scientist), but specific implementations could be, because those involve engineering choices that in theory are less predictable and knowledge of which could allow the design of countermeasures.

But I do admit to sharing the feeling that all of this is a contortion around secrecy rules, and that if secrecy were lessened, verification would get easier. But there is something to the idea that history has not borne that out as a very effective strategy with regards to the US and Russia.