Post archives

Filtering for posts tagged with ‘Atomic Energy Commission’

2022

December 2022

Meditations

2021

October 2021

Redactions

The untold story of the world's largest nuclear bomb, the Tsar Bomba, and the secret US efforts to match it.

June 2021

Redactions

A bizarre leak from a powerful Senator made the Top Secret debate over building the hydrogen bomb part of national discourse — and doomed its direction.

2019

December 2019

Redactions

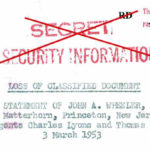

In 1953, an eminent US scientist lost the "secret of the H-bomb" under bizarre circumstances. Read the documents behind the tale.

2016

April 2016

Meditations

Inventing the bomb was hard. Maintaining the bomb was harder.

2015

September 2015

News and Notes

Richard Hewlett, the first official historian of the Atomic Energy Commission, has died at the age of 92.

March 2015

News and Notes | Redactions

The US government has once again created a headache for itself in trying to censor information about the hydrogen bomb.

January 2015

Redactions

What do the newly released Oppenheimer transcripts tell us about the security hearing, and its original redaction?

Redactions

In October 2014, the lost Oppenheimer security hearing transcripts were released. This is the story behind the story.

2013

November 2013

Meditations

A portrait of a year in flux, when the possibilities of a new order moved from the limitless to the concrete.

June 2013

Redactions | Visions

Making sense of the worst radiological accident in US history.

April 2013

Meditations | Redactions

Blacking something out is only a step away from highlighting its importance, and the void makes us curious.

January 2013

Visions

An investigation into the graphical history of the UN nuclear watchdog.

2012

September 2012

Redactions

What drove Edward Teller to push for a 10,000-megaton hydrogen bomb?

Redactions

In 1955, the U.S. Atomic Energy Commission experimentally answered the question none of us were asking: if you nuke beer, does it change the taste?

July 2012

Meditations

Reports from the annual meeting of the Society for Historians of American Foreign Relations: Farm Hall, David Lilienthal, Atoms for Peace.

June 2012

Redactions

Hans Bethe on why it was safe to declassify Project SUNSHINE, a study of the global effects of nuclear fallout.

Redactions

Why Hans Bethe wanted to postpone the test of the first hydrogen bomb in 1952.

Redactions

Hours after the first H-bomb was detonated, the press knew about it. But why did the government try to keep it secret for years after that?

Redactions

A new theory on why Joseph Rotblat left Los Alamos, and Groves' warning to the AEC that he had "doubts" about certain people on the project.

May 2012

Redactions

Gas centrifuges posed a tough problem for the US in the 1960s, perched in between fears of proliferation and the desires of industry.

Redactions

In 1946, scientists at the U. of Penn. attempted to publish a book about atomic bomb design. 60+ years later, here is the censored chapter.

April 2012

Meditations

In February 1951, the Atomic Energy Commission reported on the "pleasant" experience of rooting out a high-placed homosexual.

Visions

The origins of one of the most persistent totems of the atomic age.

March 2012

Redactions

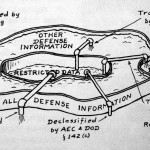

It should come as no surprise that so much of the work of secrecy is creating ever more baroque and detailed categorization schemes.

February 2012

Redactions

The story behind the broadcast of the first hydrogen bomb test, two years after it happened.

January 2012

Meditations

A summary of a sticky historical issue: whether civilians or military personnel would physically control the atomic bomb.

Meditations

New nuclear tidbits from eight just-declassified secret transcripts of the Congressional committee charged with atomic oversight issues.

Redactions

On the agonizing compromises made when one brother is the country's top nuclear scientist, and the other is a former Communist Party member.

Visions

Is "leak" a good metaphor for unofficial information flow? Probably not.