Redaction is one of those practices we take for granted, but it is actually pretty strange if you think about it. I mean, who would imagine that the state would say, “well, all of this is totally safe for public consumption, except for a part right here, which is too awful to be legally visible, so I’ll just blot out that part. Maybe I’ll do it in black, maybe in white, maybe I’ll add DELETED in big bold letters, just so I know that you saw that I deleted it.”

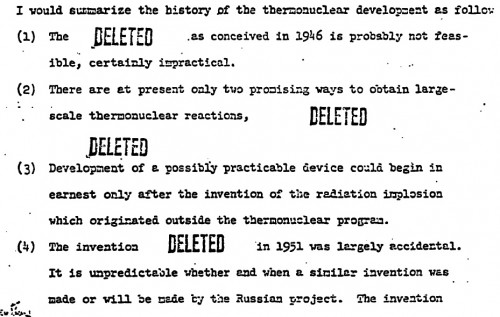

From Hans Bethe’s “Memorandum on the History of the Thermonuclear Program” (1952), which features some really provocative DELETED stamps. A minimally-redacted version assembled from many differently redacted copies by Chuck Hansen is available here.

From a security perspective, redaction is actually rather generous. The redactor often gives us the context of the secret and the length of the material kept from us (a word? a sentence? a paragraph? a page?), and helpfully draws our eye to the parts of the document that still contain juicy bits. The Onion’s spoof from a few years back, “CIA Realizes It’s Been Using Black Highlighters All These Years,” is not far from the truth. Blacking something out is only a step away from highlighting its importance, and the void makes us curious. In fact, learning what was actually in there can be quite anticlimactic, like learning how a magician does a trick (“the guy in the audience is in on the trick”).

And, of course, the way the US declassification system is set up virtually guarantees that multiple, differently redacted copies of documents will eventually exist. Carbon copies of the same documents sit in multiple agencies, and each agency can be separately petitioned for copies of its files. Each agency then sends them to individual reviewers, who consult their guides and try to interpret them. There’s very little centralization, and lots of individual discretion in interpreting the guides.

The National Security Archive recently posted an Electronic Briefing Book that was very critical of this approach. In their case, they pointed out that a paragraph in a once-secret document, deemed completely safe by a redactor in 2001, was deemed secret again in 2003, reaffirmed as safe in 2007, and then declared secret once more in 2012. “There often seems little logic to redaction decisions, which depend on the whim of the individual reviewer, with no appreciation of either the passage of time or the interests of history and accountability,” writes Michael Dobbs.

This sort of thing happens all the time, of course. The National Security Archive’s Chuck Hansen papers contain bundles of little stapled “books” that Hansen assembled from multiple, differently redacted copies of the same document. They are fun to browse through: four different versions of the same page, each hacked up somewhat differently.

A page from a 1951 meeting transcript of the General Advisory Committee, from the Hansen files, animated to show how he stapled three different copies together. Some documents contain five or more separate versions of each page.

In the case of Hansen’s papers, these differences arose because he filed Freedom of Information Act requests (or looked at the results of others’ requests) with different agencies over extended periods of time. The passage of time matters because the guides change in the meantime (usually toward making things less secret; “reclassification” is tricky). And multiple sites mean that completely different redactors are looking at the document, often with different priorities or expertise.

Two different redactors, working with the exact same guides, can come up with very different interpretations. This is arguably inherent to any kind of classifying system, not just one for security classifications. (Taxonomy is a vicious profession.) The guides that I have seen (all historical ones, of course) are basically lists of statements and classifications. Sometimes the statements are very precise and technical, referencing specific facts or numbers. Sometimes they are incredibly broad, referencing entire fields of study. And they can vary quite a bit — sometimes they are specific technical facts, sometimes they are broad programmatic facts, sometimes they are just information about meetings that have been held. There aren’t any items that, from a distance, resemble flies, but it’s not too far off from Borges’ mythical encyclopedia.

The statements try to be clear, but if you imagine applying them to a real-life document, you can see where lots of individual discretion would come into the picture. Is fact X implied by sentence Y? Is it derivable, if paired with sentence Z? And so on. And there’s a deeper problem, too: if two redactors identify the same fact as being classified, how much of the surrounding context do they also snip out with it? Even a stray preposition can give away information, like whether the classified word is singular or plural. What starts as an apparently straightforward exercise in cutting out secrets quickly becomes a strange deconstructionist enterprise.

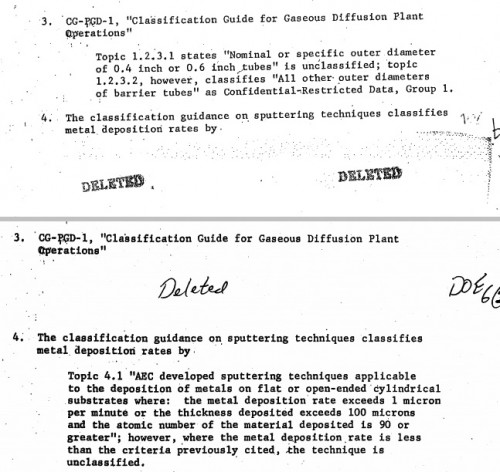

One of my favorite examples of differently redacted documents came to me through two Freedom of Information Act requests to the same agency at about the same time. Basically, two different people (I presume) at the Department of Energy looked at this document from 1970, and this was the result:

In one, the top excerpt is deemed declassified and the bottom classified. In the other, the reverse. Put them together, and you have it all. (While I’m at it, I’ll also just add that a lot of classified technical data looks more or less like the above: completely opaque if you aren’t a specialist. That doesn’t mean it isn’t important to somebody, of course. It is one of the reasons I am resistant to any calls for “common sense” classification, because I think we are well beyond the “common” here.) In this case, the irony is double, because what they’re de/classifying are excerpts from classification guides… very meta, no?[1]
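To make the “put them together” step concrete, here is a minimal Python sketch of what Hansen’s stapled books do by hand. It assumes, hypothetically, that each copy has already been split into the same aligned segments (say, one per paragraph), with `None` standing in for anything blotted out. The function name `merge_copies` and the sample strings are illustrative only, not any real archival tool.

```python
from typing import Optional, Sequence

# Hypothetical marker for a segment the reviewer withheld.
REDACTED = None


def merge_copies(*copies: Sequence[Optional[str]]) -> list[Optional[str]]:
    """Combine differently redacted copies of one document.

    Assumes every copy is split into the same aligned segments, with
    REDACTED (None) wherever that copy withheld a segment. A segment
    stays REDACTED only if every copy withheld it.
    """
    if not copies:
        return []
    length = len(copies[0])
    if any(len(copy) != length for copy in copies):
        raise ValueError("copies must be aligned segment-for-segment")

    merged: list[Optional[str]] = []
    for versions in zip(*copies):
        # Keep the first released version of this segment, if any copy released it.
        released = next((v for v in versions if v is not REDACTED), REDACTED)
        merged.append(released)
    return merged


# Two copies of a two-excerpt page, each withholding the other's excerpt.
copy_a = ["top excerpt", REDACTED]
copy_b = [REDACTED, "bottom excerpt"]
print(merge_copies(copy_a, copy_b))  # ['top excerpt', 'bottom excerpt']
```

In practice the alignment assumption is the hard part: redactors snip out different amounts of surrounding context, so matching segments up across copies is itself interpretive work.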

What’s going on here? Did the redactors really interpret their guidelines in exactly opposite ways? Were both of these borderline cases where discretion was required? Or was it just an accident? Any of these is plausible, though I suspect both excerpts were borderline cases and their juxtaposition is just a coincidence. I don’t actually see this as a symptom of dysfunction, though. I see it as a natural result of the kind of declassification system we have. It’s the function, not the dysfunction: the system is simply set up in a way that produces these kinds of results.

The idea that you can slot all knowledge into neat little categories that perfectly overlap with our security concerns is already a problematic one, as Peter Galison has argued. Galison’s argument is that security classification systems assume that knowledge is “atomic,” which is to say, comes in discrete bundles that can be disconnected from other knowledge (read “atomic” like “atomic theory” and not “atomic bomb”). The study of knowledge (either from first principles or historically) shows exactly the opposite — knowledge is constituted by sending out lots of little tendrils to other bits of knowledge, and knowledge of the natural world is necessarily interconnected. If you know a little bit about one thing you often know a little bit about everything similar to it.

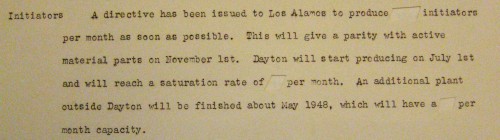

For this archive copy of a 1947 meeting of the General Advisory Committee, all of the raw numbers were cut out with X-Acto knives. Somewhere, one hopes, is an un-mutilated version. In some cases, numbers like these were initially omitted in drawing up the original documents, and a separate sheet of numbers would be kept in a safe, to be produced only when necessary.

This is a good philosophical point, and arguably a much stronger one for scientific facts than for many other kinds (the number of initiators, for example, is much less easily connected to other facts than, say, the chemistry of plutonium). But I would add that layered on top of it is the practical problem of getting multiple human beings to agree on how to implement these classifications. That is, the classifications are already problematic, and now you’re trying to get people to interpret them uniformly? Impossible… unless you opt for maximum conservatism and a minimum of discretion. Which isn’t what anybody is calling for.

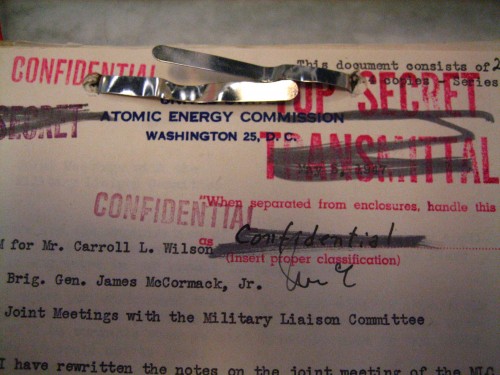

In theory, you can read the classification history of a document from all of its messy stamps and scribblings. They aren’t just for show; they tell you what it’s been through, and how to regard it now.

Declassification can be arbitrary, or at least appear arbitrary to those of us locked outside of the process. (It is one of the symptoms of secrecy that the logic of the redactor is itself usually secret.) But to me, the real sin of our current system is the lack of resources put towards it, which makes the whole thing run slow and leads to huge backlogs. When the system is running at a swift pace, you can at least know what it is they’re holding back from you, compare it to other sources, file appeals, draw attention to it, and so on. When it takes years to start processing requests (as is the case with the National Archives, in my experience; it varies a lot by agency), much less actually declassify them, there is a real impediment to research and public knowledge. I’d rather declassification be arbitrary and fast than conservative and slow.

It bothers me less that individual redactors, each interpreting the guidelines according to the standards they are told to use, come up with different results. There is going to be a certain amount of error in any large system, especially one that deals with borderline cases and allows individual discretion. Sometimes you win, sometimes you lose, but being able to play the game in the first place is what matters most to me.

1. The document discusses instances in which classification guidelines are based on strict numerical limits, as opposed to general concepts. Citation: Murray L. Nash to Theos Thomson (3 November 1970), “AEC Classification Guidance Based on Numerical Limits,” part of SECY-625, Department of Energy Archives, RG 326, Collection 6 Secretariat, Box 7832, Folder 6, “O&M 7 Laser Classification Panel.” The top version was released in response to a FOIA request I made in 2008, the bottom in response to another in 2010. Both came from FOIA requests about declassification decisions on inertial confinement fusion; the memo was part of the information given to a panel of scientists regarding the creation of new fusion classification guidelines.

Supposing a scholar were to spend years studying the classification of nuclear secrets, could he offer advice to those whose job today is deciding which stuff to stamp SECRET?

How could classification be made better in the future?

(I often see discussions of how declassification could be made better.)

It’s something I’ve thought about, obviously. The issues are many, and I’m also not 100% up on current declassification practices. (E.g., have they put all of the declassification decisions into a big database, as opposed to printed guides? It seems like an obvious thing to do. I haven’t heard of it being done, but that doesn’t mean anything.)

Broader programmatic suggestions are easier to make. E.g., rushes to declassify a lot of material (whether in the name of openness or industrialization or whatever) always lead to inadvertent errors in releases, and the discovery of inadvertent errors in releases always leads to clampdowns. Again, somewhat obvious, but this cycle has repeated itself many times.

Broadly speaking, I’m not sure there are any “easy fixes” to the current system. The volume of classified material is ridiculously large, and sorting out the stupid classifications from the serious ones is not an easy job, even for people who really would prefer very little to be classified. Barring some kind of blanket policy (e.g. everything older than 50 years is declassified no matter what), every option is painful, slow, and piecemeal, and may not result in a saner system than currently exists.

I was once given a redacted business document where the characters in names had been replaced with upper case ‘X’ characters, preserving the first and last name lengths.

Oops.
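A quick sketch of why that is an “oops”: if every letter becomes X but the spaces survive, the masked name still carries a length signature, and anyone with a list of plausible names can filter against it. The masking rule below is an assumption about how that document was redacted, and the names and helper functions are made up for illustration.

```python
import re


def mask_name(name: str) -> str:
    # Assumed redaction rule: replace every letter with 'X', keep spaces.
    return re.sub(r"[A-Za-z]", "X", name)


def length_signature(text: str) -> tuple[int, ...]:
    # Lengths of the space-separated parts, e.g. first and last name.
    return tuple(len(part) for part in text.split())


def consistent_candidates(masked: str, candidates: list[str]) -> list[str]:
    # Keep only candidate names whose part lengths match the masked name.
    target = length_signature(masked)
    return [name for name in candidates if length_signature(name) == target]


masked = mask_name("Ada Lovelace")  # 'XXX XXXXXXXX'
suspects = ["Ada Lovelace", "Bob Smith", "Eve Anderson"]  # hypothetical list
print(consistent_candidates(masked, suspects))  # ['Ada Lovelace', 'Eve Anderson']
```

A fixed-width placeholder for every name would remove this signal entirely.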

Hello, I was wondering if you’ve ever come across any studies that examine what happens if a one-megaton thermonuclear weapon is set off directly above a nuclear reactor or a pile of fuel rods? Can this lead to fission of the uranium-238 in the reactor by deuterium-tritium fusion neutrons? That is, would a one-megaton weapon set off in such circumstances possibly have an effect like a much larger Tsar Bomba-type weapon, or to put it another way, could the reactor serve as a third stage? If so, then NUKEMAP could be modified to include this effect – if it even exists, I have no idea, but it seems possible, and you would think that some think-tank group or other would have been asked to look into the possibility.

I’ve never heard of such a study, though I wouldn’t be surprised if there were studies on the effects of nuclear weapons on nuclear power plants. It’s the sort of thing a Civil Defense expert might be concerned with.

My initial thought is that the neutrons from the first bomb would be blocked by all of the various substances between it and the core, though. Reactor cores are basically smothered in neutron reflectors, absorbers, and moderators. So I’m not seeing it as super likely that very many of the high-energy neutrons from the 1-MT bomb would actually even reach the U-238 at the energies needed to produce fission. To me, the more likely scenario is breach of containment and a much worse fallout problem. But I’m no scientist.

There is a chapter in *Strategic Nuclear Targeting*, edited by Desmond Ball and Jeffrey Richelson, that is directly relevant to this question: ‘Targeting Nuclear Energy’ by Bennett Ramberg. Its text and footnotes refer to a number of other, more technical studies that could be of interest to you.

The expurgated version is that there are a number of ways nuclear weapons and nuclear power plants could ‘interface.’ These include blast effects that could disrupt a plant’s cooling system while leaving the containment vessel intact, possibly EMP, and a ‘direct hit’ or something close to it that would breach the containment vessel.

If the containment vessel were breached, the radioactive content of the reactor core would be added to the radioactivity produced by the nuclear detonation. The result would be much higher radiation levels around the plant and detonation site and downwind, and consequently a larger land area rendered uninhabitable. Moreover, post-war cleanup and recovery would be complicated by the fact that radioactivity from the reactor core is longer-lived than that generated by a nuclear weapon.

There’s a very useful table on page 257. A snippet of it says that a 1 MT weapon would leave 1,200 square miles of land uninhabitable for 1 year, and the meltdown of a 1,000 MWe reactor would leave 900 square miles uninhabitable for the same period. By contrast, using a 1 MT weapon on a 1,000 MWe reactor would leave 25,000 square miles uninhabitable for 1 year, more than ten times the two separate figures combined.

So, in short, according to Ramberg, using a nuclear weapon on a nuclear reactor wouldn’t cause a bigger explosion, just as Alex suggested, but it would absolutely cause a bigger radiation release.

Well, that’s cheery!

As an absolute non-expert (but I do read Richard Rhodes!) … this scenario seems enormously implausible. The fissioning of ordinary uranium requires very specific conditions, one of which is geometry: the usual separation is not more than a metre or two, I think. Also, other materials are included in the structure as reflectors etc.

And of course, a reactor has a pressure vessel lid that is thick and dense; in most countries, the reactor is also enclosed in an enormously massive reinforced concrete containment structure. The big flux of neutrons is EXTREMELY brief, so a multi-stage design must be able to exploit them within millionths of a second.

There is a real concern, however — radioactive contamination from reactors is (in some ways) vastly more dangerous than fallout from a bomb. So a “direct hit” on a reactor facility might magnify the long-term health risks quite a lot. Even if the structure were strong enough to protect the reactor core itself (and we are talking about a very strong structure indeed), hellishly dangerous spent fuel is often stored in much weaker adjacent structures.

The redactor doesn’t know what context their released information is going to be used in. Information that is perfectly innocuous if the reader knows only one set of facts is terribly dangerous if the reader knows a different set. (Think of the classified-sphere example.) So unless you keep everything classified, you have to make guesses…
