One of the really salient issues about nuclear weapons is that they are expensive. There’s just no way to really do them on the cheap: even in an extremely optimized nuclear weapons program, one that uses lots of dual-use technology bought off-the-shelf, to make a nuclear weapon you need some serious infrastructure.

A piece of gold the weight of Little Boy would have cost between $5 and $6 million in 1945. The fissile material for Little Boy cost well over $1 billion. So it would actually have been a pretty good bargain at the time if Little Boy had cost its weight in gold. Also, I knew that making a highly-realistic model of Little Boy in Blender would come in handy someday.

That’s not to say that you need to redundantly overspend as much as the Manhattan Project did, or the US did during the Cold War, but even “cheap” nuclear weapons programs are pretty costly. There are a few multinational corporations that could probably pull it off if they were given carte blanche with the technology, but basically you’re talking about a weapon that is made for, and by, states. (I’m not, of course, ignoring the possibility of hijacking someone else’s infrastructural investments, which is another way to think about theft of fissile material.)

Solid gold B61s aside, this is a good thing. It actually makes nuclear weapons somewhat easy to regulate. I know, I know — the history of trying to control the bomb isn’t usually cited as one of the great successes of our time, but think about how much harder it would be if you couldn’t spot bomb factories? If every university physics department could build one? If they were really something you could do, from scratch, in an old airstream trailer?

Herman Kahn, the great thinker of unthinkable thoughts, has a bit about the relationship between cost and doomsday in his 1965 book On Escalation, which someone in the audience of a talk I gave last month helpfully sent along to me:1

Assume that it were possible to manufacture a “doomsday machine” from approximately $10 worth of available materials. While it might be “unthinkable” that the world would be destroyed by such a “doomsday machine,” it would also be almost inevitable. The only question would be: Is it a matter of minutes, hours, days, months, or years? About the only conceivable way of preventing such an outcome would be the imposition of a complete monopoly upon the relevant knowledge by some sort of disciplined absolutist power elite; and even then one doubts that the system would last.2

If the price of the “doomsday machine” went up to a few thousand, or hundreds of thousands, of dollars, this estimate would not really be changed. There are still enough determined men in the world willing to play games of power blackmail, and enough psychopaths with access to substantial resources, to make the situation hopeless.

If, however, the cost of “doomsday machines” were several millions or tens of millions of dollars, the situation would change greatly. The number of people or organizations having access to such sums of money is presently relatively limited. But the world’s prospects, while no longer measured by the hour hand of a clock, would still be very dark. The situation would improve by an order of magnitude if the cost went up by another factor of 10 to 100.

It has been estimated that “doomsday” devices could be built today for something between $10 billion and $100 billion. [Multiply that by 10 for roughly current price in USD]3 At this price, there is a rather strong belief among many, and perhaps a reasonably well-founded one, that the technological possibility of “doomsday machines” is not likely to affect international relations directly. The lack of access to such resources by any but the largest nations, and the spectacular character of the project, make it unlikely that a “doomsday machine” would be built in advance of a crisis; and fortunately, even with a practical tension-mobilization base, such a device could not be improvised during a crisis.

In other words, since Doomsday Machines are phenomenally expensive, and thus only open as options to states with serious cash to spend (and probably serious existing infrastructures), the odds of them being built, much less used, are pretty much nil. Hooray for us! (Nobody tell Edward.) But as you slide down the scale of cheapness, you slide into the area of likelihood — if not inevitability — given how many genuinely bad or disturbed people there are in the world.

Cost and control go hand-in-hand. Things that are cheap (in terms of hard cash as well as opportunity cost, potential risk of getting caught, and so on) are more likely to happen; things that are expensive are not. The analogy to nuclear weapons in general is pretty obvious and no doubt deliberate. Thank goodness H-bombs are expensive in every way. Too bad that guns are not, at least in my country.

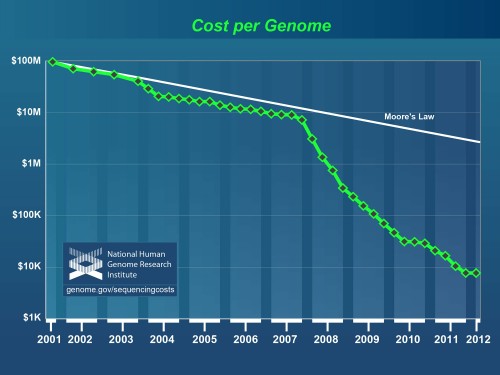

But the area where I really start thinking about this is biology. Check out this graph:

Cost of sequencing a human-sized genome, 2001-2012. From the National Human Genome Research Institute.

This graph is a log chart of the cost of sequencing an entire human genome, plotted over the last decade or so. Moore’s Law is plotted in white — and from 2001 through the end of 2007, the lines roughly match. But at the beginning of 2008, sequencing genomes got cheap. Really cheap. Over the course of four years, the cost dropped from around $10 million to about $10,000. That’s three orders of magnitude. That’s bananas.
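To put rough numbers on that comparison, here is a back-of-the-envelope sketch in Python. The start and end costs are the round figures quoted above, not values read from the NHGRI data, and the 24-month halving time used for Moore's Law is an assumption for illustration only.

```python
import math

# Round figures quoted in the post (approximate, not exact NHGRI values).
cost_start = 10_000_000  # dollars per genome, around the start of 2008
cost_end = 10_000        # dollars per genome, about four years later
span_months = 4 * 12

# Assumption: a "Moore's Law" pace means cost halves every 24 months.
moore_halving_months = 24

# Size of the observed drop, as a factor and in orders of magnitude.
drop_factor = cost_start / cost_end
orders_of_magnitude = math.log10(drop_factor)

# Halving time implied by the observed drop over the same span.
sequencing_halving_months = span_months / math.log2(drop_factor)

# Where the cost would have ended up at the assumed Moore's Law pace.
moore_factor = 2 ** (span_months / moore_halving_months)
moore_cost_end = cost_start / moore_factor

print(f"Observed drop: {drop_factor:,.0f}x ({orders_of_magnitude:.1f} orders of magnitude)")
print(f"Implied halving time: {sequencing_halving_months:.1f} months")
print(f"Cost at a Moore's Law pace: ${moore_cost_end:,.0f}")
```

Under those assumptions, a Moore's Law pace alone would have brought the cost down to about $2.5 million, while the drop described above corresponds to the cost halving a little under every five months.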

I was already reeling at this graph when I saw that Kathleen Vogel has a very similar chart for DNA synthesis in her just-published book, Phantom Menace or Looming Danger?: A New Framework for Assessing Bioweapons Threats (Johns Hopkins University Press, 2012). (I haven’t had a chance to read Kathleen’s book yet, but flipping through it, it is pretty fascinating — if you are interested in WMD-related issues, it is worth picking up.)

Everything regarding the reading and writing of DNA is getting phenomenally cheap, really quickly. There’s been a blink-and-you-missed-it biological revolution over the last five years. It’s been caused by a relatively small number of commercial players who have made DNA sequencing into an automated, computer-driven, cheap process.4 It will probably hit some kind of floor — real-world exponential processes eventually do — but still.

I don’t have anything much against DNA sequencing getting cheap. (There are, of course, implications for this, but none that threaten to destroy the world.) DNA synthesis makes me pause — it is not a huge step from DNA synthesis to virus synthesis, and from there to other bad ideas. But as Kathleen emphasizes in her book (and in talks I’ve seen her give), it’s not quite as easy as the newspapers make out. For now. We’re still probably a few decades away from your average med school student being able to cook up biological weapons, much less biological Doomsday Machines, in a standard university research laboratory. But we’re heading down that road with what seems to me to be alarming speed.

Don’t get me wrong — I think the promises of a cheap revolution in biology are pretty awesome, too. I’d like to see cancer kicked as much as, and maybe even more than, the next guy. I’m not anti-biology, or anti-science, and I’m not a fan of letting a purely security-oriented mindset dominate how we make choices as a society. I don’t necessarily think secrecy is the answer when it comes to trying to control biology — it didn’t really work with the bomb very well, in any case. But I do think the evangelists of the new biology should treat these sorts of concerns with more than a knee-jerk, “you can’t stop progress” response. I’m all in favor of big breakthroughs in medicine and biology, but I just hope we don’t get ourselves into a world of trouble by being dumb about prudent regulation.

What disturbs me the most about this stuff is that compared to the best promises and worst fears of the new biology, nuclear weapons look easy to control. The bomb was the easy case. Let’s hope that the next few decades don’t give us such a revolution in biology that we inadvertently allow for the creation of Doomsday Machines on the cheap.

1. Herman Kahn, On Escalation: Metaphors and Scenarios (Transaction Publishers, 2010 [1965]), 227-228.

2. Note the implicit connection here between knowledge and the importance of cheapness — when materials are cheap, knowledge becomes everything. Or, to put it another way, this is why computer viruses are everywhere and atomic bombs are not.

3. Here he cites his own On Thermonuclear War, page 175, but in the copy I have, it is page 145, footnote 2: “While I would not care to guess the exact form that a reasonably efficient Doomsday Machine would take, I would be willing to conjecture that if the project were started today [1960] and sufficiently well supported one could have such a machine by 1970. I would also guess that the cost would be between 10 and 100 billion dollars.” $10 billion USD in 1960, depending on the conversion metric you use, is something in the neighborhood of 100 billion dollars today, with inflation.

4. I thank my friend Hallam Stevens for cluing me in on this. His work is really must-read if you want to know about the computerized automation of sequencing work.

This is an excellent comparison, and your observation that the bomb was the easy case is sobering and apt. A huge difference, of course, between the nuclear case and the biotech case is that in the 1950s there was no “plutonium valley” full of startups competing to bring the cost of nuclear weapons under $1000, so that we could all stock our bomb shelters. Where the nuclear case took place in a climate of support for government regulation, the biotech revolution is happening in the age of the Infallible Free Market. I.e., today, lack of control is *built into the system.*

With the atomic bomb, this was quite explicit: it was methodically and consciously removed from the free market. As one of the drafters of the Atomic Energy Act put it (famously), “the field of atomic energy is made an island of socialism in the midst of a free-enterprise economy.” This was loosened up in the 1950s to stimulate the nuclear power industry, but there are still many monopolistic aspects on the books.

Alex-

Interesting post – I did a pilot project with Cyrus Mody a few years back on “high tech intellectuals” and their fascination with exponentiality of all stripes. Of course, one can’t throw an iPhone at a TED meeting without someone showing some sort of exponential-based curve. Your post reminds me of the people who made dire predictions about nuclear weapons before 1945…and those who are making similar claims about DNA synthesis work now (the recent Atlantic piece about hacking the President’s DNA, etc.). One of the papers I’m working on now is how there is this eschatological trope that comes with almost all emerging technologies.

P.

In meditating on the “almost all emerging technologies,” I thought, “I wonder if anyone did this for coal, back in the day?” Probably not — there were hazards, of course, but even the worst complaints about railroads weren’t proposing total annihilation. And so there’s the irony: it may be carbon-based industrialization that does us in (to some extent), rather than anything more exotic. I’m more worried about unchecked climate change than I am about the possibilities of full nuclear doomsday.

Two banal comments:

Guns are cheap, and we have Sandy Hook, Mumbai, etc. If the perpetrators could kill more in spectacular fashion, they surely would.

Viral DNA shouldn’t be hard to make if you’re careful and patient. Packaging might take more effort, but I’ve never studied that. Source: http://oai.dtic.mil/oai/oai?verb=getRecord&metadataPrefix=html&identifier=ADA409078

Building viruses from scratch has been done. Kathleen Vogel talks in depth in her book about the case from just a few years back where scientists took computerized representations of DNA and were able to make them into living polio viruses. But it’s still not easy. It doesn’t require a lot of physical money, but it does require being an expert in virology who has worked on similar problems for the last decade or so. That’s a pretty big opportunity cost, aside from the problems of practical weaponization. But the bar for these things is steadily lowering, and the most ambitious proposals by the evangelists of synthetic biology would more or less have the bar disappear altogether. This disturbs me.

I wonder now if you noticed the author of the report I cited…

Ha! I didn’t, but now I did. Well then!

The report itself looks largely theoretical, no? Correct me if I’m wrong. What’s interesting to me about the things Vogel has looked at is how they reduce to practice, and what difficulties there are in reducing them to practice.

It was theoretical: IDA doesn’t do lab work as a rule (fortunately, neither do most mathematicians).

Packaging and delivery both seem considerably harder to do because AFAIK (I’m ten years removed from all things biological) those processes are much further from leveraging COTS technology.

There will probably be other, better executed Aum Shinrikyo type events in the future though.

Well, that’s… depressing.

That’s a pretty big opportunity cost, aside from the problems of practical weaponization.

What’s the difficulty in weaponizing a contagious disease? You just need volunteers. Failing that, it should be easy enough to find unwilling carriers. If someone could recreate smallpox, for example, a few volunteers and plane tickets to take them to the busiest airports in the world are all that’s needed for a global pandemic.

Soviet officials were aware of the potential for biological weapons to be the poor man’s atom bomb. In The Dead Hand, I describe the handwritten minutes taken at a Kremlin meeting to discuss BW in July 1989. The notetaker wrote:

| Per 1 | Cost |
| --- | --- |
| Conventional | 2,000 doll. |
| Nuclear | 800 ” |
| Chem | 60 ” |
| Bio | 1 ” |

The unit of measurement is not stated, but apparently was dollars.

All that said, I think advances in computational biology and sequencing seem to be enormous, and so far there is no sign of malevolent use. Not that it cannot happen or will not. But the gains are huge.

David, thank you for this. What an odd note. I’m puzzling over what the values could mean — is it dollars per death?

I don’t think we should halt the technology or the science — but I do think we should think very hard about anything that will decrease the difficulty of doing Very Bad Things. This doesn’t mean trying to strangle a science in the cradle; it just means taking a serious approach to regulation before there’s a problem. (Which, ironically, is exactly what Edward Teller advocated regarding nuclear power, in the early days: the best thing for the growth of nuclear power was to keep it from anything close to an accident, since you’d only need one big one for the public to turn against it. He wasn’t entirely wrong, there.)

A plague takes time to disperse so there is the possibility that governments will take the threat seriously someday and have labs ready to synthesize some sort of vaccine using the same biotechnology but with a very large and efficient infrastructure. Countries would have to close off their borders in the mean time. Very disruptive for sure. Dispersal would be more efficient using ICBMs but that puts the cost beyond crazy individuals who aren’t in control of governments. But I suppose lots of people could position themselves all over the world and release their deadly plague simultaneously. Yeah, we’re effed.

Hi again. It occurs to me that the more people involved in an undertaking, the more difficult it is to keep it a secret which reduces the likelihood of it being carried out without being intercepted by the anti-terrorism agencies of the world. So perhaps the scenario that I previously described isn’t inevitable. Whew!

I don’t think it necessarily has to involve many people — consider a 12 Monkeys-like scenario, where a very virulent plague is released by a single individual at a major international airport hub (say, San Francisco or JFK). If engineered to be hard to detect (e.g. a long incubation/latency period where it would be spread further but not detected) it could easily mean millions of people infected — far worse than anything else that’s on the table for a single individual at the moment.

Or, to put it another way: I don’t think of myself as particularly clever when it comes to thinking up Very Bad Things to do with custom-made biology. I’ve certainly met people who were cleverer — the sort of folks who have what Bruce Schneier calls the security mindset. But it’s easy — in the absence of hard data on what can and can’t be done with the biology of the next few decades, of course — to come up with scenarios where vast numbers of people are really hurt by the actions of a single individual, if the best hopes of synthetic biology come to pass, and if the technology is entirely unregulated. So that’s why I’m disturbed, especially when the biologists have taken a largely “let us self-regulate, don’t stop science” approach to talking about possible future security threats.

The fewer terrorists involved, the longer the latency period required to allow time for everyone on earth to be infected (otherwise it wouldn’t be a doomsday scenario). Suppose a latency period of a year is required, that means when the first people infected start dying, the last people infected would have a year to come up with a cure. Though I guess the bad guys could engineer a virus with shorter latency periods with each successive generation (via some kind of programmed mutation) so that the last people don’t have enough time. A tricky kind of virus to engineer though. My argument is getting more absurd with each sentence so I will sign off. Enjoyed your post.

Well, sure, a true “everybody at once” doomsday would be very hard to engineer. It may even be impossible if you were trying to get all of the hermits and isolated tribes and whatnot. But you don’t have to scale down your ambitions much to cause a lot of trouble, trouble which would itself potentially spiral into other issues (imagine the international situation if you had something like the Spanish flu break out — 500 million infected in 1918, 1-3% of the world population dead — and it was clear that it was caused by someone with an agenda).

I’m pretty sure studies have been done on how much of a population has to be bed-ridden and in need of care from others before societies start breaking down. I seem to think the number was relatively small, like ten percent. But that may have been a calculation based on older societies without the production capacity of current first world nations, so the old number (whatever it was) may not apply.

But imagine being able to synthesize a strain of smallpox. That’s very nasty all by itself, no real need to weaponize it. Imagine being able to synthesize several strains, each with enough variance one from another that a vaccine for one may not work as a vaccine for another, as with flu strains. Assume a relatively small number of people are needed for the production. (Obviously, though, they need to be the right people.) Not everyone involved needs to know what is actually happening, either. No reason to tell the janitor what’s going on.

Once you’ve got several strains ready to go you just need a few volunteers. You can’t tell me that certain societies don’t produce such people. Plus, even if they know they’re in for excruciating death, they can always kill themselves once they’ve spread the disease to a certain degree.

Then unleash the strains one after the other. A few weeks for the first strain to take hold (via infected volunteers going to well populated places with lots of traffic: airports, first-world subway systems, etc.), then send out the second wave, then the third. By the time the second wave becomes apparent you might not get the chance to send out the third wave, as international travel would be shut down.

Heck, do them in barely intersecting waves and you may get even more bang for the buck. First wave east Asia and the Pacific Rim, Second wave Russia and Europe. Etc.

You don’t have to kill everyone to get societal collapse.

So grim, so grim! I hate to agree with Herman Kahn, whose best contribution was to inspire a second-rate metal band with his “megadeaths,” but it does seem that in the long game, most of the WMDs are inevitable, and cheaper with time. I wonder about another “when”, namely when international control regimes will break down due to complete lack of credibility.

Alex:

A powerful meditation.

MK

As a biologist, I feel I must point out at least one other major difference between nukes and viruses.

Nukes are expensive, yes, but they are also incredibly hard to stop once launched. In fact, it is even more expensive to stop a nuke than it is to launch one. This is one of the underpinnings of MAD, after all: that any defence can be countered, any target saturated.

Biology, on the other hand, is easier to defend against. Just as it is becoming easier to synthesize a virus, so it is becoming easier to synthesize a vaccine. Furthermore, there are limits involved which nukes do not have to deal with: our long fight with biological interlopers has made our own defences pretty sharp (to the point that some avenues of attack are effectively cut off) and, as biological entities in their own right, viruses and bacteria are subject to hard limits and compromises in terms of their virulence, spread and so on.

Biological weapons may become a large threat, it is true (especially the more cunning, nasty stuff we could do with, say, wasps – who are like the all-purpose terrible bastards of nature). But they will remain just that: a weapon, not a doomsday device.

But reactive response (coming up with a vaccine) requires time, both to develop and to deliver. The possibilities for offensive biology seem to me, a non-expert, to vastly out-strip the defensive capabilities. It’s very easy to imagine (again, in the absence of hard data about the real capabilities here) viruses that spread widely, stay latent for a while, then suddenly enact their negative effects. One might not worry as much about “true Doomsday,” whatever that means, but in terms of human suffering the response could be very bad indeed, it strikes me.

Or to put it another way: if the worst that can happen is something that looks like pandemics in recent human history (say, the Spanish Flu of 1918), that is pretty bad, by any standards. But I find even assuming that such a thing would be the worst possible outcome is probably optimistic.

I, on the other hand, take that outcome as pessimistic.

This is because, as we study the great pandemics of history on a molecular level, we tend to find that the pathogens themselves aren’t particularly unusual.

Black plague, for instance, is pretty much the same now as it was then. What has changed is that there isn’t a reservoir population of unexposed, chronically malnourished people who could incubate the disease in terrible sanitary conditions before passing it on.

Even in the most stereotypically hellish third-world scenarios, medicine has advanced to the point that such outbreaks can be contained and treated.

Similarly, the Spanish flu (while very virulent) was not that different from swine flu on a genetic level. The major change between then and now is that we haven’t had four years of total war to collapse our agricultural sector and force us onto a diet of potatoes and turnips.

Secondly, pathogens are living organisms and, as such, are subject to selection once released. More often than not this selection tends to favour longer incubation times and lower virulence, leading to attenuation of the outbreak. A good modern example of this would be AIDS, which fairly rapidly underwent attenuation from an acute disease that killed its host in a few years to a chronic disease that took decades to kill. The example is, of course, not perfect due to the introduction of ARVs and change in overall host population, but still gives an insight into the evolutionary processes involved.

As I said before: biological weapons are biological. They are constrained by a number of factors (transmission route, mode of entry, mode of replication, host defences etc.) which force restrictions on just how fast and how deadly they can be. In this, they are fundamentally different from chemical or nuclear weapons.

Furthermore, due to the afore-mentioned interaction between host and pathogen, they are also much more variable in terms of their effects. You could drop weaponised ebola onto a modern city and the result could consist of a few isolated fatalities or a nationwide pandemic, with the balance of probability falling more towards the ‘isolated’ end of the spectrum.

My point was that it takes time to develop a plague as well, with the improvements in biotech helping both sides of the equation equally.

Like I said, biotech can be used to make weapons. But I disagree that it can currently produce something that could be described as a doomsday weapon.

I like how you included a picture of Little Boy and a photo of a Fat Man.

Alex, I realise I’m already in danger of saturating this topic with blocks o’ text, but would you allow me to write a long-form reply on the state of biotech as it stands today?

It’s off on a tangent, but may be useful for understanding any claims about DIY bio-terrorism, along with some general moaning about how much we don’t know/can’t do yet when it comes to actually unravelling biological systems…


Alex:

Reading your excellent (as usual) piece left me with this thought: the best graphics about the Bomb coincided with the best writing about the Bomb.

Best wishes for the new year,

MK

Not to be disagreeable, but the dirty little secret of nuclear weapons is how very cheap they are compared to any other means of doing so much physical destruction. That’s why the Eisenhower Administration went to “massive retaliation”. It was enormously cheaper than maintaining a standing army.

Cost was a figure in the SDI debate too. At the time the price tag for a single MX warhead ran about a million bucks (physics package) making it obvious that any defense could be saturated easily.

The initial infrastructure is costly, but production is not.

pz