One of the unexpected things that popped up on my agenda this last week: I was asked to give a private talk to General Michael Hayden, the former director of the National Security Agency (1999-2005), and the Central Intelligence Agency (2006-2009). Hayden was at the Stevens Institute of Technology (where I work) giving a talk in the President’s Distinguished Lecture Series, and as with all such things, part of the schedule was to have him get a glimpse of the kinds of things we are up to at Stevens that he might find interesting.

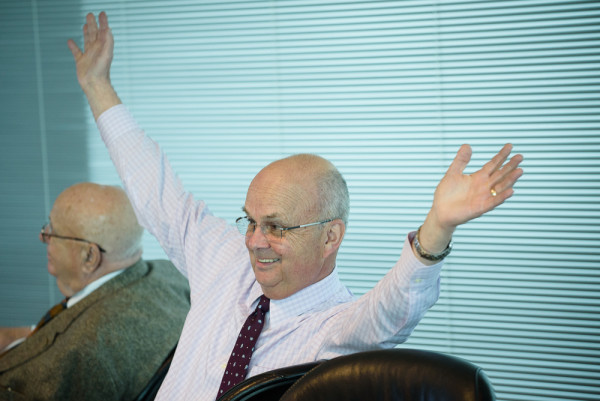

The group that met with General Michael Hayden last Wednesday. Hayden is second from left at the far side of the table. The President of Stevens, Nariman Farvardin, is nearest to the camera. I am at the table, at the back. All photos by Jeffrey Vock photography, for Stevens.

What was strange, for me, was that I was being included as one of those things. I am sure some of my readers and friends will say, “oh, of course they wanted you there,” but I am still a pretty small fry over here, an assistant professor in the humanities division of an engineering school. The other people who gave talks either ran large laboratories or departments with obvious connections to the kinds of things Hayden was doing (e.g., in part because of its proximity to the Hudson River, Stevens does a lot of very cutting-edge work in monitoring boat and aerial vehicle traffic, and its Computer Science department does a huge amount of work in cybersecurity). That a junior historian of science would be invited to sit “at the table” with the General, the President of the Institute, and a handful of other Very Important People is not at all obvious, so I was surprised and grateful for the opportunity.

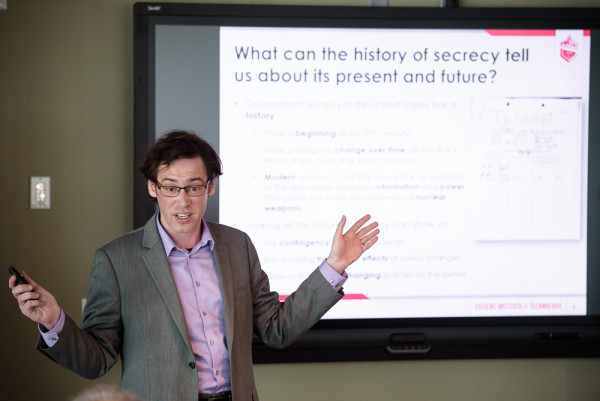

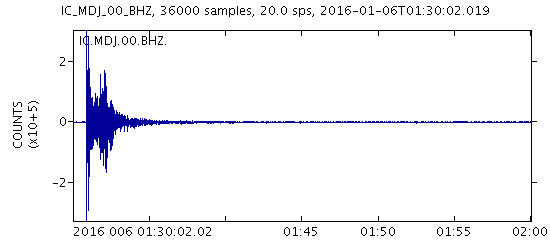

So what does the historian of secrecy say to one of the “Super Spooks,” as my colleague, the science writer (and critic of US hegemony and war) John Horgan, dubbed Hayden? I pitched two different topics to the Stevens admin — one was a talk about what the history of secrecy might tell us about the way in which secrecy should be talked about and secrecy reform should be attempted (something I’ve been thinking about and working on for some time, a policy-relevant distillation of my historical research), the other was a discussion of NUKEMAP user patterns (which countries bomb who, using a dataset of millions of virtual “detonations” from 2013-2016).[1] They opted for the first one, which surprised me a little bit, since it was a lot less numbers-driven and outward-facing than the NUKEMAP talk.

Yours truly. As you will notice, there was a lot of great gesturing going on all around while I was talking. I am sure a primatologist could make something out of this.

The talk I pitched to the General covered a few distinct points. First, I felt I needed to quickly define what Science and Technology Studies (STS) was, as that is the program I was representing, and it is not an extremely well-known discipline outside of academia. (The sub-head was, “AKA, Why should anyone care what a historian of science thinks about secrecy?”) Now, those who practice STS know that there have been quite a few disciplinary battles over what STS is meant to be, but I gave the basic overview: STS is an interdisciplinary approach by humanists and social scientists that studies science and technology and their interactions with society. STS is sort of an umbrella-discipline that blends the history, philosophy, sociology, and anthropology of science and technology, but it is also influenced, at times, by fields like psychology, political science, and law, among many others. It is generally empirical (but not always), and usually qualitative, though sometimes quantitative in its approach (e.g., bibliometrics, computational humanities). In short, I pitched, while lots of people have opinions about how science and technology “work” and what their relationship is with society (broadly construed), STS actually tries to apply academic rigor (of various degrees and definitions) to understanding these things.

Hayden was more receptive to the value of this than I might have guessed, but this seemed in part to be because he majored in history (for both a B.A. and M.A., Wikipedia tells me), and has clearly done a lot of reading around in political science. Personally I was pretty pleased with this, just because we historians, especially at an engineering school, often get asked what one can do with a humanities degree. Well, you can run the CIA and the NSA, how about that!

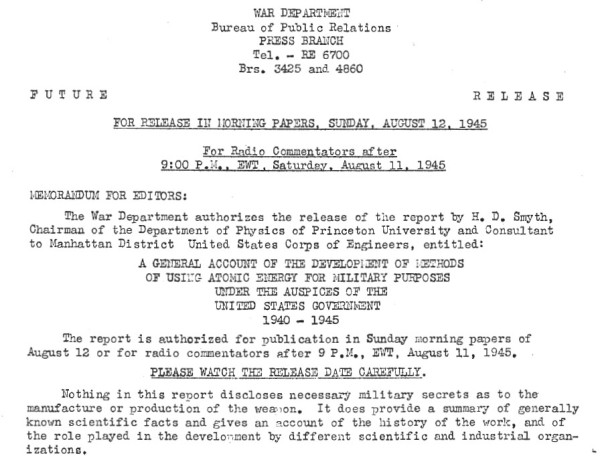

I then gave a variation on talks I have given before on the history of secrecy in the United States, and what some common misunderstandings are. First, I pointed out that there are some consequences in just acknowledging that secrecy in the US has a history at all — that it is not “transhistorical,” having existed since time immemorial. You can pinpoint the beginnings of modern secrecy fairly precisely: World War I saw the emergence of many trends that became common later, like the focus on “technical” secrets and the first law (the Espionage Act) that applied to civilians as well as the military. World War II saw a huge, unrelenting boom of the secrecy system, with (literally) overflow amounts of background checks (the FBI had to requisition the DC Armory and turn it into a file vault), the rise of technical secrecy (e.g., secrecy of weapons designs), the creation of new classification categories (like “Top Secret,” created in 1944), and, of course, the Manhattan Project, whose implementation of secrecy was in some ways quite groundbreaking. At the end of World War II, there was a curious juncture where some approaches to classification were handled in a pre-Cold War way, where secrecy was really just a temporary situation due to ongoing hostilities, and some started to shift towards a more Cold War fashion, where secrecy became a facet of American life.

The big points are — and this is a prerequisite for buying anything else I have to say about the topic — that American secrecy is relatively new (early-to-mid 20th century forward), that it had a few definite points of beginning, that the assumption that the world was full of increasingly dangerous information that needed government regulation was not a timeless one, and that it had changed over time in a variety of distinct and important ways. In short, if you accept that our secrecy is the product of people acting in specific, contingent circumstances, it stops you from seeing secrecy as something that just “has to be” the way it is today. It has been otherwise, it could have been something else, it can be something else in the future: the appeal to contingency, in this case, is an appeal to agency, that is, the ability for human beings to modify the circumstances under which they find themselves. This is, of course, one of the classic policy-relevant “moves” by historians: to try and show that the way the world has come to be isn’t the only way it had to be, and to try and encourage a belief that we can make choices for how it ought to be going forward.

General Hayden seemed to accept all of this pretty well. I should note that throughout the talk, he interjected with thoughts and comments routinely. I appreciated this: he was definitely paying attention, to me and to the others. I am sure he does things like this all the time, visiting a laboratory or university, being subjected to all manner of presentations, and by this point he was post-lunch, a few hours before giving his own talk. But he stayed with it, for both me and the other presenters.

The rest of my talk (which was meant to be only 15 minutes, though I think it was more towards 25 with all of the side-discussions) was framed as “Five myths about secrecy that inhibit meaningful policy discussion and reform.” I’m not normally prone to the “five myths” style of talking about this (it is more Buzzfeed than academic), but for the purpose of quickly getting a few arguments across I thought it made for an OK framing device. The “myths” I laid out were as follows:

Myth: Secrecy and democracy necessarily conflict. This is the one that will make some of my readers blanch at first read, but my point is that there are areas of society where some forms of secrecy need to exist in order to encourage democracy in the first place, and there are places where transparency can itself be inhibiting. The General (unsurprisingly?) was very amenable to this. I then argued that the trick is to make sure we don’t get secrecy in the areas where it does conflict with democracy. The control of information can certainly conflict with the need for public understanding (and right-to-know) that makes an Enlightenment-style democracy function properly. But we needn’t see it as an all-or-nothing thing — we “just” have to make sure the secrecy is where it ought to be (and with proper oversight), and the transparency is where it ought to be. Hayden seemed to agree with this.

Myth: Secrecy and security are synonymous. Secrecy is not the same thing as security, but they are often lumped together (both consciously and not). Secrecy is the method, security is the goal. There are times when secrecy promotes security — and there are times in which secrecy inhibits it. This, I noted, was one of the conclusions of the 9/11 Commission Report as well: that lack of information sharing had seriously crippled American law enforcement and intelligence with regard to anticipating the attacks of 2001. I also pointed out that the habitual use of secrecy led to its devaluation — that when you start stamping “TOP SECRET” on everything, it starts to mean a lot less. The General strongly agreed with this. He also alluded to the fact that nobody ought to be storing any kind of government e-mails on private servers these days, because the system was so complicated that literally nobody ever knew if they were going to be generating classified information or not — and that this is a problem.

I also noted that an impression, true or not, that secrecy was being rampantly misapplied had historically had tremendously negative effects on public confidence in governance, which can lead to all sorts of difficulties for those tasked with said governance. Hayden took to this point specifically, thought it was important, and brought up an example. He said that the US compromise of the 1970s was to get Congressional “buy-in” to any Executive or federal classified programs through oversight committees. He argued that the US, in this sense, was much more progressive with regard to oversight than many European security agencies, which essentially operate exclusively under the purview of the Executive. He said that he thought the NSA had done a great job of getting everything cleared by Congress, of making a public case for doing what it did. But, he acknowledged that clearly this effort had failed — the public did not have a lot of confidence that the NSA was being properly overseen, or that its actions were justified. He viewed this as a major problem for the future: how US intelligence agencies will operate within the expectations of the American people. I seem to recall him saying (I am reporting this from memory) that this was just part of the reality that US intelligence and law enforcement had to learn to live with — that it might hamper them in some ways, but it was a requirement for success in the American context.

Myth: Secrecy is a wall. This is a small intervention I made in terms of the metaphors of secrecy. We talk about it as walls, as cloaks, and curtains. The secrecy-is-a-barrier metaphor is perhaps the most common (and gets paired a lot with information-is-a-liquid, e.g., leaks and flows), and, if I can channel the thesis of the class I took with George Lakoff a long time ago, metaphors matter. There is not a lot you can do with a wall other than tolerate it, tear it down, find a way around, etc. I argued here that secrecy definitely feels like a wall when you are on the other “side” of it — but it is not one. If it were one, it would be useless for human beings (the only building made of nothing but walls is a tomb). Secrecy is more like a series of doors. (Doors, in turn, are really just temporary walls. Whoa.) Doors act like walls if you can’t open them. But they can be opened — sometimes by some people (those with keys, if they are locked), sometimes by all people (if they are unlocked and public). Secrecy systems shift and change over time, and so does who has access to the doors. This comes back to the contingency issue again, but it also refocuses our attention less on the fact of secrecy itself and more on how it is used, when access is granted versus withheld, and so on. As a historian, my job is largely to go through the doors of the past that used to be locked, but are now open for the researcher.

Myth: Secrecy is monolithic. That is, “Secrecy” is one thing. You have it or you don’t. As you can see from the above, I don’t agree with this approach. It makes government secrecy about us-versus-them (when in principle “they” are representatives of “us”), and it makes it seem like secrecy reform is the act of “getting rid of” secrecy. It makes secrecy an all-or-nothing proposition. This is my big, overarching point on secrecy: it isn’t one thing. Secrecy is itself a metaphor; it derives from the Latin secerno: to separate, part, sunder; to distinguish; to set aside. It is about dividing the world into categories of people, information, places, things. This is what “classification” is about and what it means: you are “classifying” some aspects of the world as being only accessible to some people of the world. The metaphor doesn’t become a reality, though, without practices (and here I borrow from anthropology). Practices are the human activities that make the idea or goal of secrecy real in the world. Focus on the practices, and you get at the heart of what makes a secrecy regime tick; you see what “secrecy” means at any given point in time.

And, per my earlier emphasis on history, this is vital: looking at the history of secrecy, we can see the practices move and shift over time, some coming into existence at specific points for specific reasons (see, e.g., my history of secret atomic patenting practices during World War II), some going away over time, some getting changed or amplified (e.g., Groves’ amplification of compartmentalization during the Manhattan Project — the idea preceded Groves, but he was the one who really imposed it on an unprecedented scale). We also find that some practices are the ones that really screw up democratic deliberation, and some of them are the ones we think of as truly heinous (like the FBI’s COINTELPRO program). But some are relatively benign. Focusing on the practices gives us something to target for reform, something other than saying that we need “less” secrecy. We can enumerate and historicize the practices (I have identified at least four core practices that seem to be at the heart of any secrecy regime, whether making an atomic bomb or a fraternity’s initiation rites, but for the Manhattan Project there were dozens of discrete practices that were employed to try and protect the secrecy of the work). We can also identify which practices are counterproductive, which ones fail to work, which ones produce unintended consequences. A practice-based approach to secrecy, I argue, is the key to transforming our desires for reform into actionable results.

Myth: The answer to secrecy reform is balance. A personal pet peeve of mine is appeals to “balance” — we need a “balance of secrecy and transparency/openness/democracy,” what have you. It sounds nice. In fact, it sounds so nice that literally nobody will disagree with it. The fact that the ACLU and the NSA can both agree that we need to have balance is, I think, evidence that it means nothing at all, that it is a statement with no consequences. (Hayden seemed to find this pretty amusing.) The balance argument commits many of the sins I’ve already enumerated. It assumes secrecy (and openness) are monolithic entities. It assumes you can get some kind of “mix” of these pure states (but nobody can articulate what that would look like). It encourages all-or-nothing thinking about secrecy if you are a reformer. Again, the antidote for this approach is a focus on practices and domains: we need practices of secrecy and openness in different domains in American life, and focusing on the effects of these practices (or their lack of existence) gives us actionable steps forward.

I should say explicitly: I am not an activist in any way, and my personal politics are, I like to think, rather nuanced and subtle. I am sure one can read a lot of “party lines” into the above positions if one wants to, but I generally don’t mesh well with any strong positions. I am a historian and an academic — I do a lot of work trying to see the positions of all sides of a debate, and it rubs off on me that people of all positions can make reasonable arguments, and that there are likely no simple solutions. That being said, I don’t think the current system of secrecy works very well, either from the position of American liberty or the position of American security. As I think I make clear above, I don’t accept the idea that these are contradictory goals.

Hayden seemed to take my points well and largely agree with them. In the discussion afterwards, some specific examples were brought up. I was surprised to hear (and he said it later in his talk, so I don’t think this is a private opinion) that he sided with Apple in the recent case regarding the FBI and “cracking” the iPhone’s security. He felt that while the legal and Constitutional issues probably sat in the FBI’s camp, he thought the practice of it was a bad idea: the security compromise for all iPhones would be too great to be worth it. He didn’t buy the argument that you could just do it once, or that it would stay secret once it was done. I thought this was a surprising position for him to take.

In general, Hayden seemed to agree that (1) the classification system as it exists was not working efficiently or effectively, (2) over-classification was a real problem and led to many of the huge issues we currently have with it (he called the Snowden leaks “an effect and not a cause”), and (3) people in the government are going to have to understand that the “price of doing business” in the United States was accepting that you would have to make compromises in what you could know and what you could do, on account of the needs of our democracy.

Hayden’s last slide: “Buckle up: It’s going to be a tough century.” Though I know he’d agree that the last one was no walk in the park, either…

Hayden then went and gave a very well-attended talk followed by a Q&A session. I live-Tweeted the whole thing; I have compiled my tweets into a Storify, if you want to get the gist of what he said. He is also selling a new book, which I suspect has many of these same points in it.

My concluding thoughts: I don’t agree with a lot of Hayden’s positions and actions. I am a lot less confident than he is that the NSA’s work with Congress, for example, constitutes appropriate oversight (it is plainly clear that Congressional committees can be “captured” by the agencies they oversee, and with regard to the NSA in particular, there seems to have been some pretty explicit deception involved in recent years). I am not at all confident that drone strikes do a net good in the regions in which we employ them. I am deeply troubled by things like extraordinary rendition, Guantanamo Bay, water boarding, and anything that shades towards torture, a lack of adherence towards laws of war, or a lack of adherence towards the basic civil liberties that our Constitution articulates as the American idea. Just to put my views on the table. (And to make it clear, I don’t necessarily think there are “simple” solutions to the problems of the world, of the Middle East, or of America. But I am deeply, inherently suspicious that the answer to any of them involves doing things that are so deeply oppositional to these basic American military and Constitutional values.)

But, then again, I’d never be put in charge of the NSA or the CIA, either, and there’s likely nobody who would ever be put in charge of said organizations that I would agree with on all fronts. What I did respect about Hayden is that he was willing to engage. He didn’t really shy away from questions. He also didn’t take the position that everything that the government has done, or is doing, is golden. But most important, for me, was that he took some rather nuanced positions on some tough issues. The core of what I heard him say repeatedly was that the Hobbesian dilemma — that the need for security trumps all — could not be given an absolute hand in the United States. And while we might disagree on how that works out in practice, the fact that he was willing to walk down that path, and not merely say it as a platitude, meant something to me. He seemed to be speaking quite frankly, and not just reciting a party or policy line. That’s a rare thing, I think, for former high-ranking public officials (and not so long out of office) who are giving public talks — usually they are quite dry, quite unsurprising. Hayden, whether you agree or disagree with him, is neither of these things.

- I wrote up a preliminary analysis of NUKEMAP patterns a few years ago, but my 2013 upgrade of the NUKEMAP dramatically increased the kinds of metrics I recorded, and the dataset has grown by an order of magnitude since then. Lest you worry, I take care to anonymize all of the data. There is also an “opt out” option regarding data logging on the NUKEMAP interface.