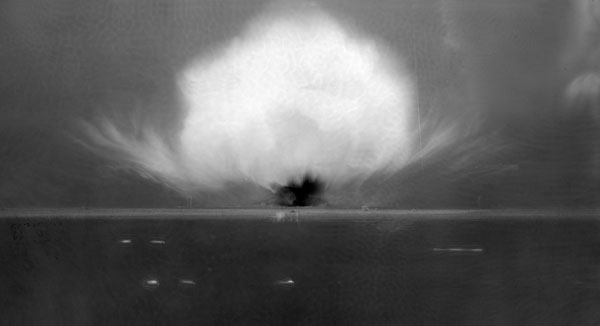

On the morning of August 6th, 1945 — 68 years ago today — the “Little Boy” atomic bomb was dropped on Hiroshima, Japan, by the American B-29 bomber, the Enola Gay.

In the last year, I’ve written about the bombing of Hiroshima quite a bit on here already in many different modes:

- The Hiroshima Leaflet — Did the United States “warn” Japan about the bomb?

- Hiroshima and Nagasaki in Color — Getting beyond the black and white

- The Decision to Use the Bomb: A Consensus View? — What do historians think today about the motivations for dropping the bomb?

- Who Knew about Radiation Sickness, and When? — Was radiation a consideration before dropping the bomb?

- Martian Perspectives — Leo Szilard and Edward Teller argue about whether to drop the bomb

- Going Back to Tinian — The island from which the attack was launched, today

- “We all aged ten years until the plane cleared the island” — The practical dangers of the first nuclear weapons

- The Week of the Atom Bomb — The headlines from a tumultuous week

- The Height of the Bomb — The grim logic behind the first nuclear targeting policy

- Hiroshima at 67: The Line We Crossed — The atomic bombs were morally identical to the firebombings, but that only complicates things

68 years later and we’re still grappling with the meaning of that legacy. We’re still debating it, still arguing about it, still researching it. It seems like one of those issues that will be hotly contested as long as people feel they have some stake in the outcome. As the generation that lived through World War II passes into history, I wonder how our views on this will evolve. Will they become more detached from the people and the events, and will that result in more hagiography (“Greatest Generation,” etc.) or its opposite? It will be interesting to see, in the decades to come.

Historical memory is skittish in its attentions. Our understanding of what was important about the present and past changes rapidly. Neal Stephenson, one of my favorite science fiction authors, has a wonderful conceit in his novel Anathem, whereby one group of scholars writes a history of their times once a year and then, every decade, forwards those histories on to another group of scholars. That group pares them down to the things that still seem important, and then, every century, forwards ten of those on to yet another group of scholars. Those scholars (who are essentially isolated from all other news of the world) then pare out everything that no longer seems important, and every thousand years, forward their histories on to another isolated group. I find this a wonderful illustration of the paring effect that time has on our understanding of the past, and how much that once seemed so important is soon viewed as irrelevant.

One need only look through the newspapers that broke the news of Hiroshima (that is, those from August 7th, 1945, because of time zones and deadlines for morning editions) to see how much this is the case. Not all are as blatant as this sad tie-in from The Boston Daily Globe (August 7, 1945, page 4):

In defense of whoever chose that headline, they had to fill page space, and it’s clear they recognized how insipid this “new machine” was when nestled amongst war news. But there are other story decisions that are in some ways much more striking in retrospect.

Take, for example, the headlines above the fold of the Los Angeles Times (August 7):

Most of the headlines are devoted to the atomic bomb. Most of those about the bomb itself are either verbatim copies of, or derived from, the press releases and stories distributed by the Manhattan Project’s Public Relations Organization (yes, they had such a thing!). The one bomb story on the page that does not come from that source is, tellingly, completely incorrect: a report that earthquakes in Southern California from the past three years were “the explosions of atomic bombs.” Um, not exactly. (There were large tests of chemical explosives at the Navy’s China Lake facility in Southern California, as part of Project Camel, but no atomic bomb tests out there, obviously.) The other big stories of the day are two deaths. One was that of Senator Hiram Johnson, an isolationist who bitterly opposed American foreign entanglements. There is something appropriate about his passing away just as the United States was entering into a new era of such entanglements.

The other was the death of Major Richard Bong, a death so important at the time that its headline is only a tiny bit smaller than the news of Hiroshima itself. As the article explains, Richard I. Bong was a 24-year-old fighter pilot, the highest-scoring U.S. fighter ace of World War II, having shot down at least 40 confirmed Japanese planes. He died on familiar soil, as a test pilot in North Hollywood. His plane, an experimental P-80 Shooting Star, the United States’ first operational jet fighter, exploded a few minutes after takeoff. Bong attempted to abandon the plane, but it exploded and killed him.

Major Bong’s death got front billing in all of the major national newspapers. It was understandably most prominent in Los Angeles, where it was local news. But even the venerable New York Times, which had some of the thickest bomb coverage on account of its Manhattan Project-embedded reporter, William L. Laurence, slipped him on there, at the top, in the same size headline as the one describing the Trinity test:

Today, practically nobody has heard of Major Bong. I occasionally bring him up as an example of how many of the top news stories of today are going to be unheard of in a few years. The reaction I usually get is disbelief: 1. Surely “Major Bong” is a made-up name, and 2. Really, he shared the headlines with Hiroshima?

One gets this sensation frequently whenever one looks through the newspapers of the past. When my wife teaches her high school students about World War II, she prints out front pages of newspapers for various “famous events” of the day and has her students look at them in their entirety. It’s a useful exercise, not only because it makes the past feel real and relatable (hey, they wrote puff stories about new, dumb inventions, too!), but because it also emphasizes how disconnected the front pages of a newspaper might be from how we later think about a time or event, or from the later evaluation of a President, or from an understanding of a war. It is an exercise that also illustrates how a careful understanding of the past encourages a careful understanding of the present. What story of today will be the Major Bong of tomorrow? And who is to say that Major Bong’s story shouldn’t be better known, and less overshadowed by other events of the time? There is nothing like steeping yourself in the news of a past period, to see how both strange and familiar it is, and to see how the grand and the mundane were always intermingled (as they clearly are today).

Personally, while I think Hiroshima is obviously worth talking about, I think we put perhaps too much emphasis on it, and in doing so remove it from its context. Other headlines on the same day talk about other bombing raids, including firebombing raids: the broader context of strategic bombing, and the targeting of civilians, of which the atomic bombs were only a part. I think, on the anniversary of Hiroshima, we should of course think about Hiroshima. But let’s not forget all of the other things that happened at that time (even on the same day) that get overshadowed when we hold up one event above all others.